Streaming Analytics Platforms

Analyst Coverage: Philip Howard and Daniel Howard

“Streaming” is the ability to move large quantities of information from one or more sources to one or more targets in real time. In the world of media, live streaming platforms are commonplace, and streaming platforms provide a comparable service for IT: for example, moving sensor data from its origins and delivering it to a relevant location for processing.

While all stream processing platforms have some ability to manipulate data while it is in-stream, streaming analytics platforms extend this functionality with analytic capabilities. These include, but are not limited to, deploying machine learning and deep learning algorithms against streams as they are processed, for example for real-time fraud detection. In this sense streaming analytics platforms are an evolution of what was historically known as “complex event processing”, where a “complex event” is an event (or pattern) derived from two or more originating events. The more generic term “event stream processing” is sometimes used to encompass this space. Whatever you call it, the key concept is that streamed “events” are queried (processed) before they are persisted in a database or, in some cases, without ever being stored at all, or being stored only in aggregated form.
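To make the idea of a complex event concrete, here is a minimal Python sketch, not any vendor's API: a hypothetical `overheat` event is derived from two originating events (`temp_high` and `pressure_high`) reported by the same device within a few seconds of each other. The event types, the five-second window and the assumption that events arrive in timestamp order are all illustrative.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Tuple

@dataclass(frozen=True)
class Event:
    device: str
    kind: str   # hypothetical event types: "temp_high" or "pressure_high"
    ts: float   # event time in seconds

@dataclass(frozen=True)
class ComplexEvent:
    device: str
    kind: str
    ts: float
    sources: Tuple[Event, Event]  # the originating events it was derived from

# Each event type is paired with the event type that completes the pattern.
PARTNER = {"temp_high": "pressure_high", "pressure_high": "temp_high"}

def detect_overheat(events: Iterable[Event], window: float = 5.0) -> Iterator[ComplexEvent]:
    """Yield an 'overheat' complex event when temp_high and pressure_high occur
    for the same device within `window` seconds. Assumes events arrive in time order."""
    pending: dict = {}  # (device, kind) -> most recent unmatched event
    for ev in events:   # each event is handled as it arrives; nothing is persisted
        if ev.kind not in PARTNER:
            continue
        key = (ev.device, PARTNER[ev.kind])
        other = pending.get(key)
        if other is not None and ev.ts - other.ts <= window:
            del pending[key]  # consume the matched pair
            yield ComplexEvent(ev.device, "overheat", ev.ts, (other, ev))
        else:
            pending[(ev.device, ev.kind)] = ev

stream = [
    Event("m1", "temp_high", 0.0),
    Event("m2", "pressure_high", 1.0),
    Event("m1", "pressure_high", 3.0),  # completes the pattern for m1
    Event("m2", "temp_high", 9.0),      # too late to pair with m2's reading at 1.0
]
alerts = list(detect_overheat(stream))
print([(a.device, a.ts) for a in alerts])  # -> [('m1', 3.0)]
```

Only the derived event needs to leave the stream; the raw readings that produced it can be discarded once matched.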

Streaming analytics platforms differ from pure streaming products in their focus. The latter are about the movement of data, though they may support the deployment of analytics on top of their capabilities. Streaming analytics platforms, by contrast, have built-in capabilities designed to support analytics, which may extend beyond deploying machine learning models to training the relevant algorithms. Most typically, the data being processed consists of sensor data, stock ticks or other classical “events” such as log data. These platforms are often an evolution of complex event processing offerings. Whereas the latter were targeted primarily at algorithmic trading and similar environments within capital markets, over the last few years the technology has become more oriented towards analytic processing, especially in the light of big data and the Internet of Things.

Traditional query techniques involve storing the data and then running a query against it. However, ingesting and storing the data takes time. Streaming analytics avoids this delay by passing the data through a query or algorithm during ingestion, so that persistence no longer impedes processing performance. That said, in-memory databases have reduced this penalty, and using streaming products such as Kafka and Flink in conjunction with in-memory database processing is a viable alternative to a streaming analytics platform.
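The difference between store-then-query and query-during-ingestion can be sketched as a continuous query that computes per-sensor averages over fixed (tumbling) time windows as readings arrive. This is a minimal illustration assuming in-order `(timestamp, sensor_id, value)` tuples and a hypothetical 60-second window; only the aggregates it yields would be handed to a database, which corresponds to the “stored only in aggregated form” case.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

Reading = Tuple[float, str, float]  # (timestamp_seconds, sensor_id, value)

def _emit(start: float, sums: Dict[str, float], counts: Dict[str, int]) -> List[Reading]:
    # One (window_start, sensor_id, mean) row per sensor seen in the window.
    return [(start, s, sums[s] / counts[s]) for s in sorted(sums)]

def tumbling_averages(readings: Iterable[Reading], width: float = 60.0) -> Iterator[Reading]:
    """Yield (window_start, sensor_id, mean) for each sensor when a window closes.
    Raw readings are discarded after use; only running sums and counts are kept.
    Assumes readings arrive in timestamp order."""
    current = None
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for ts, sensor, value in readings:
        start = ts - (ts % width)  # start of the window this reading belongs to
        if current is not None and start != current:
            yield from _emit(current, sums, counts)  # a newer window began, so the old one is complete
            sums.clear()
            counts.clear()
        current = start
        sums[sensor] += value
        counts[sensor] += 1
    if current is not None:
        yield from _emit(current, sums, counts)  # emit the final window at end of input

readings = [(0.0, "s1", 10.0), (30.0, "s1", 20.0), (45.0, "s2", 5.0), (61.0, "s1", 40.0)]
persisted = list(tumbling_averages(readings))
print(persisted)  # -> [(0.0, 's1', 15.0), (0.0, 's2', 5.0), (60.0, 's1', 40.0)]
```

Keeping only sums and counts means memory grows with the number of sensors active in a window, not with the number of readings.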

Note, however, that it is not always as simple as passing the data through a query. It may be more a question of pattern recognition, whereby a series of events is correlated and together meets, or fails to meet, an expected pattern. Credit card fraud detection, a common use for streaming analytics, is a typical example, since it often depends on the pattern across a series of transactions rather than on any single transaction.
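Pattern recognition keeps state across many events rather than evaluating each one alone. A minimal sketch of one simple style of fraud rule, a transaction-velocity check, follows: it flags a card once it has more than a given number of transactions inside a sliding time window. The thresholds and the `(timestamp, card_id)` input format are hypothetical, and a production system would look for far richer patterns than this.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Iterator, Tuple

def velocity_alerts(txns: Iterable[Tuple[float, str]],
                    max_txns: int = 3,
                    window: float = 60.0) -> Iterator[Tuple[float, str, int]]:
    """Yield (ts, card_id, count) whenever a card has more than `max_txns`
    transactions within the last `window` seconds. Assumes time-ordered input."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)  # card_id -> recent timestamps
    for ts, card in txns:
        times = recent[card]
        times.append(ts)
        while ts - times[0] > window:  # drop transactions that fell out of the window
            times.popleft()
        if len(times) > max_txns:
            yield (ts, card, len(times))

txns = [(0.0, "A"), (10.0, "A"), (20.0, "B"), (30.0, "A"), (40.0, "A"), (200.0, "A")]
flagged = list(velocity_alerts(txns))
print(flagged)  # -> [(40.0, 'A', 4)]
```

A deque per card keeps eviction cheap: each timestamp is appended and removed at most once.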

Streaming analytics platforms offer performance suitable for processing anything from tens of thousands of events per second to millions or tens of millions per second, depending on the platform and the use case. Latency is typically measured in milliseconds, if not microseconds.
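As a rough back-of-envelope check (an illustration, not a figure from any vendor), sustained throughput bounds the average processing time available per event: with $\lambda$ events per second spread over $p$ parallel workers, each event must on average be handled in no more than $p/\lambda$ seconds, otherwise queues grow without limit.

```latex
t_{\text{event}} \le \frac{p}{\lambda}, \qquad
\lambda = 10^{7}\,\text{events/s}:\quad
p = 1 \;\Rightarrow\; t_{\text{event}} \le 10^{-7}\,\text{s} = 100\,\text{ns}, \qquad
p = 16 \;\Rightarrow\; t_{\text{event}} \le 1.6\,\mu\text{s}
```

End-to-end latency is a separate measure: it also includes network transfer and any time an event waits, for example for a window to close.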

There are many potential use cases, including telecommunications, smart meters, monitoring applications of various sorts, predictive maintenance and fraud detection, so the technology is often of interest to governance and control departments. Some solutions in this space (those that can store data) may also support functions such as real-time trending, which will be useful in some environments.
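Real-time trending needs some retained history, which is why it depends on solutions that can store data. As an illustrative sketch (the window size and the choice of a least-squares slope as the “trend” are assumptions, not a documented method), the class below keeps the last few readings and reports their slope after each update.

```python
from collections import deque
from typing import Deque, Optional, Tuple

class RollingTrend:
    """Keep the most recent `size` (time, value) points and report their
    least-squares slope as a simple measure of the current trend."""

    def __init__(self, size: int = 5) -> None:
        self.points: Deque[Tuple[float, float]] = deque(maxlen=size)  # oldest point drops out

    def update(self, t: float, value: float) -> Optional[float]:
        self.points.append((t, value))
        n = len(self.points)
        if n < 2:
            return None  # a slope needs at least two points
        mean_t = sum(p[0] for p in self.points) / n
        mean_v = sum(p[1] for p in self.points) / n
        cov = sum((p[0] - mean_t) * (p[1] - mean_v) for p in self.points)
        var = sum((p[0] - mean_t) ** 2 for p in self.points)
        return cov / var if var else None  # None if all points share one timestamp

trend = RollingTrend(size=3)
slopes = [trend.update(t, v) for t, v in [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 4.0)]]
print(slopes)  # -> [None, 2.0, 2.0, 0.5]
```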

For a long time, complex event processing struggled to find significant numbers of use cases outside capital markets. The advent of big data and the Internet of Things has provided just such opportunities. The main trend in the market is therefore the shift away from complex event processing and towards streaming analytics.

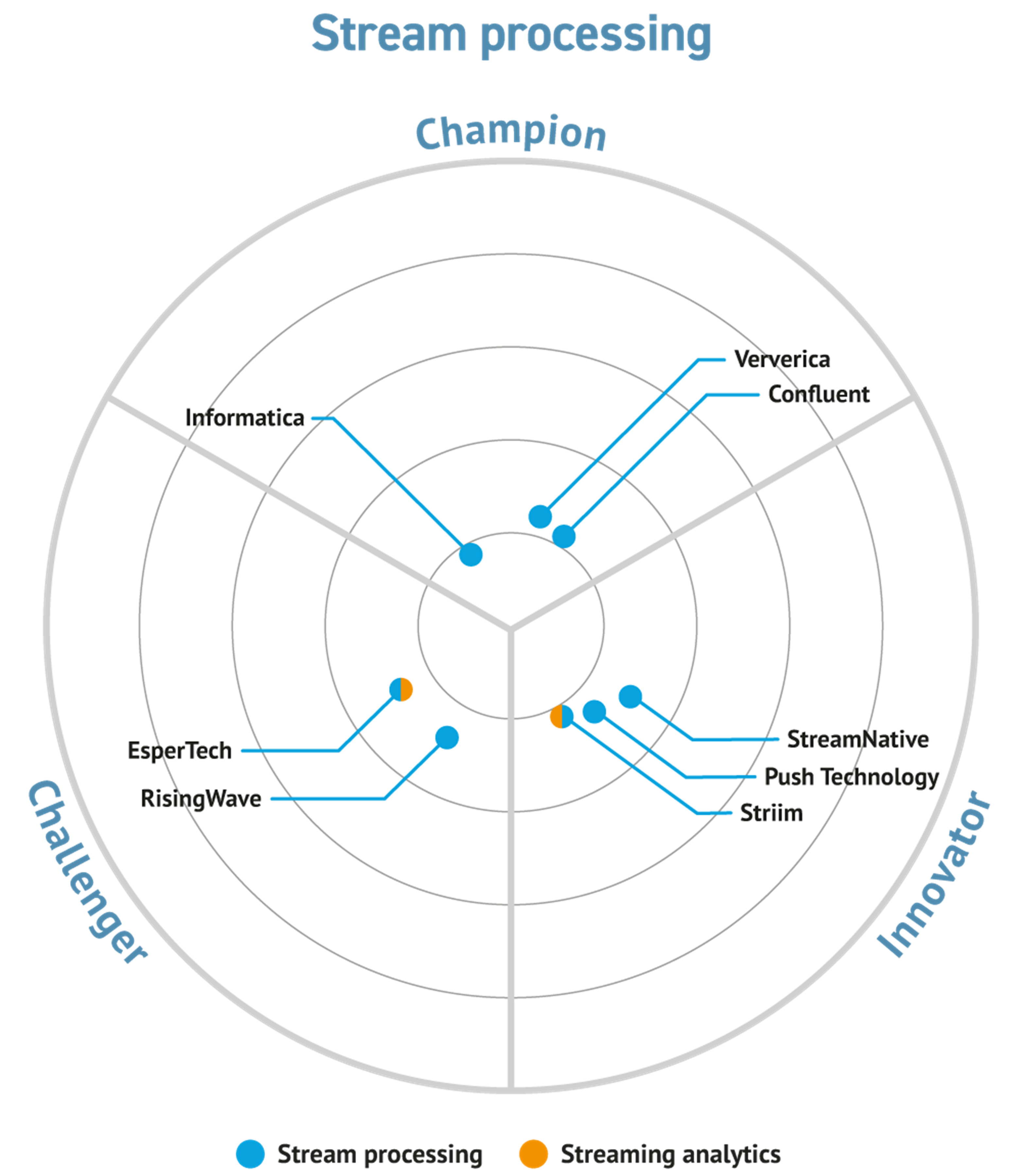

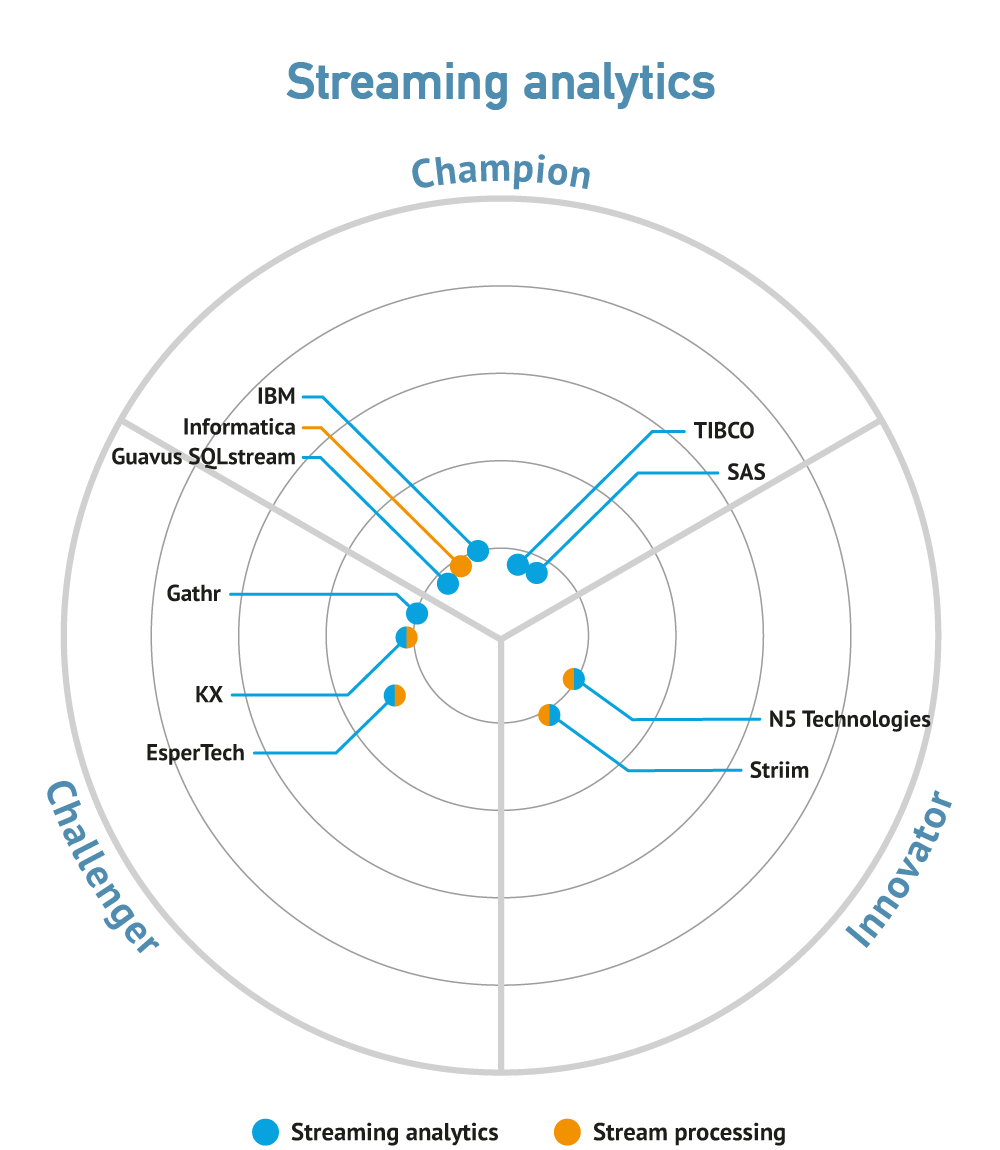

From a technical perspective the market consists of two groups of products: streaming platforms and streaming analytics platforms. The former are typically based on Apache open source projects, whereas the latter are mostly commercial developments of products that were originally targeted at complex event processing. As a result, the open source projects tend to be less mature in their support for analytics, and this is where most new development is taking place. Specifically, these open source streaming platforms are adding storage and moving towards merging batch and real-time query capabilities.

There are multiple open source streaming products, supported by a variety of vendors, most notably Kafka (Confluent), Flink (formerly data Artisans, now Ververica) and Spark Streaming (Databricks), though this category also includes suppliers such as Lightbend, StreamSets and Streamlio. As mentioned, these will often support third-party analytics. Cloudera’s support for NiFi (and MiNiFi), which are used for streaming data from edge devices in an Internet of Things context, is also worth noting.

Streaming analytics vendors tend to be more old school, with major vendors such as SAS, Oracle, IBM, Microsoft, Cisco, TIBCO, Amazon and Software AG involved, though some of these have less functional offerings than others. The most notable developments in this area over the last few months have been the demise of DataTorrent and the acquisition of SQLstream by Guavus. In addition, data Artisans has been acquired by Alibaba and renamed Ververica.
