Outcome Automation

Outcome Automation is based on Design Thinking, Digital Twin, Stakeholder Experience and Code Components (including low code/no code, Business Process Management, Rules Processing, SaaS components and even Enterprise Apps such as Zoho or Salesforce). It automates the delivery of mutable business outcomes. IT produces Insights from Analytics, AI etc. and some of these Insights are Actionable (that is, worth spending time on). Outcome Automation is the feedback-controlled process by which Actionable Insights are transformed (using quality assurance processes such as DevOps) into automated production systems delivering Business Outcomes. These Business Outcomes are monitored and measured and the results fed back (often manually but, better, automatically, using AI (Augmented Intelligence) and ML (Machine Learning) systems) as new Actionable Insights. Outcome Automation is continuous improvement driving the evolution of a Mutable Business, delivering outcomes that delight the customer.

Outcome Automation is not, primarily, a technology one can just buy. If Alice Rawsthorn can say that “design is an attitude”, Outcome Automation is an attitude too, based on Design Thinking. It is an attitude, based on an enabling culture and enabling platform, across a whole company, that provides a capability for automating business actions effectively. See also this IDEO U discussion.

An essential guiding concept here is Digital Twin.

“A Digital Twin is the ultimate expression of Outcome Automation. In other words, it is business-as-code with as much of the business function as possible automated using bots or actual robots. Taxonomy and what and how you describe things (in detail) are incredibly important. Consistency and clean/accurate data will rule.”

Paul Bevan (Research Director: IT Infrastructure at Bloor)

Think of Outcome Automation as a journey. The destination is a versioned digital abstraction of a business outcome, the Digital Twin, representing yesterday’s, today’s and tomorrow’s outcomes, together with the corresponding user experiences, and tightly coupled to the codebase/microservices/processes/machines implementing the physical system.

The Digital Twin differs from a 20th century CASE model in that it is about Systems Engineering, not just Software Engineering. It is a single source of truth (with application-specific views into it), a “System of Systems” working model covering any engineering simulations of products and assets and systems (and possibly including, less tightly coupled, human processes) up to the world-facing endpoint. In a real sense, the Digital Twin is the production system, although this will be aspirational for much of the time, for most companies. Potentially everything can be code (or robots) but the man-machine boundary is mutable, depending on complexity, available skills and available technology.

Implementation of a new version of the business, when planned, is virtually instantaneous, because visualisation of the outcome and authentication/validation is carried out on the digital twin and the implementation is automated and free of human error.

There are large-scale implementations of Digital Twin for complex systems already, such as that in Singapore.

Moving down a level, it means taking the things that an organisation is doing, or wants to do, and doing them more effectively (often, more quickly; and/or more consistently) by offloading at least some of the work involved to a machine (or machines), without significantly reducing the quality of the output. This implies, of course, that the organisation is mature enough to be capable of measuring and managing quality.

It’s also important to note that this automation does not have to be complete to be considered Outcome Automation. Automation augments human processes (in fact, complete automation, even with AI, is impossible – the Turing Halting Problem and Gödel’s Incompleteness Theorem provide limits). This is worth repeating: Outcome Automation is a collaboration between Person and Machine.

As Outcome Automation aspires to Digital Twin, which came out of visualisation of complex, safety-critical systems, visualisation is a key part of Outcome Automation. Early Digital Twins were engineering simulations (think, aero engine performance, data centre airflows etc.), with, increasingly, the simulated outcomes being fed back into the performance of the physical environment in near real-time (the best example is probably in F1 Racing). Purely thinking of Digital Twin in terms of visualisation could be a barrier to broader understanding and acceptance of the concept – but the whole area of Business Process Optimisation and Business Process Automation should be part of the digital twin story.

Nevertheless, start small and grow Outcome Automation as the sophistication of your experience with it increases. There are now plenty of examples where systems detect an issue and fire off an instruction that is enacted automatically. For example, rebooting a server where there is a simple problem like a cache that needs clearing: the automation clears the cache and reboots the server.
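As a minimal sketch of this kind of small, rules-based starting point, the Python below maps a detected event to a fixed list of remediation actions. The event kind `cache_full`, the server name and the two action functions are hypothetical placeholders; a real system would call its own monitoring and operations tooling rather than return strings.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Event:
    source: str  # hypothetical server name, e.g. "web-01"
    kind: str    # hypothetical event kind, e.g. "cache_full"


@dataclass
class Rule:
    name: str
    matches: Callable[[Event], bool]
    actions: List[Callable[[Event], str]]


# Placeholder actions: they only describe what would be done.
def clear_cache(event: Event) -> str:
    return f"cleared cache on {event.source}"


def reboot(event: Event) -> str:
    return f"rebooted {event.source}"


RULES = [
    Rule(
        name="cache-full-remediation",
        matches=lambda e: e.kind == "cache_full",
        actions=[clear_cache, reboot],
    )
]


def handle(event: Event, rules: List[Rule] = RULES) -> List[str]:
    """Run the actions of every rule that matches the event, in order."""
    log = []
    for rule in rules:
        if rule.matches(event):
            for action in rule.actions:
                log.append(action(event))
    return log
```

Calling `handle(Event("web-01", "cache_full"))` runs both actions in order, while an event that matches no rule produces an empty log, which leaves that case to a person.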

So, rules based automation is necessary for digital twins and at some point AI/ML based automation – in addition to visualisation. At the moment, the visualisation is predominantly a 2D (diagrammatic) representation of the infrastructure; but it could easily be a 3D image (photo) of the actual server rack, where a particular component flashes red and you click on it to see what the problem is.

In this event-oriented environment, low- and no-code approaches, together with graph and time series databases, will be appropriate. Take physical assets, for example: if you describe them accurately and declare what their purpose and function are and list their relationships, then very little extra code should be needed to make them perform their task.
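To illustrate the point about describing assets and their relationships, here is a small sketch in which assets are declared as plain data and one generic function walks the relationships. The asset names, the `purpose` text and the `feeds` relationship are invented for illustration; a graph database would normally hold this data.

```python
# Hypothetical asset catalogue: each asset declares its purpose and
# the assets that depend on it (the "feeds" relationship).
ASSETS = {
    "rack-1": {"purpose": "houses servers", "feeds": ["server-a", "server-b"]},
    "server-a": {"purpose": "runs payments service", "feeds": ["payments"]},
    "server-b": {"purpose": "runs reporting service", "feeds": ["reporting"]},
    "payments": {"purpose": "takes customer payments", "feeds": []},
    "reporting": {"purpose": "produces management reports", "feeds": []},
}


def impacted_by(asset_id, assets=ASSETS):
    """Return every asset downstream of asset_id, in breadth-first order."""
    seen = []
    queue = list(assets[asset_id]["feeds"])
    while queue:
        current = queue.pop(0)
        if current not in seen:
            seen.append(current)
            queue.extend(assets[current]["feeds"])
    return seen
```

Because the relationships are declared rather than coded, adding a new asset means adding one entry to the catalogue; the traversal itself does not change.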

Of course, trust, security and governance will take on greater significance as you hand over more and more functional automation to the Digital Twin. Greater discipline and better process will be needed, compared to that needed for a manual system – but things will run faster and more reliably than before and the business will be more Mutable.

The scope of Outcome Automation is, potentially, very wide. For example, one could argue that data governance is a process that can be automated, but it is more convenient to include it in Data Quality Assurance (or sensitive data management) rather than in Outcome Automation.

Nevertheless, we must be careful to avoid introducing new technology silos here. There are probably, for instance, people using an effective tool for business process automation and a less effective tool for test automation – even though the first tool could easily do both jobs. Test automation is clearly an Outcome Automation thing, but it’s also a thing we’re primarily covering elsewhere, under testing. This doesn’t mean that it necessarily needs special tools labelled “testing automation” – and using general tools might well be more effective, as those building and using the automation, as well as those testing it, will be familiar with the tool.

Clearly there’s no “universal automation technology” answer here – if nothing else, how you automate will depend on what you’re automating.

Some companies are looking to OSS (Open Source Software) community models to develop a partner ecosystem, somewhat independent of the original vendor/developer, to facilitate delivery of Outcome Automation. The ecosystem reduces risk associated with a single point of failure – the vendor, or sponsoring organisation, and its strategic plans – and encourages innovation by developers not necessarily following the original sponsor’s script.

That said, it is probably always useful to start with Design Thinking: a “non-linear, iterative process which seeks to understand users, challenge assumptions, redefine problems and create innovative solutions to prototype and test.”

Unless you have a good idea of the business outcome you wish to achieve, from the point of view of each and every stakeholder, what you automate may not result in what the organisation wants and/or needs.

These days, one will probably want to adopt an Agile approach and work at a higher level of abstraction than is possible with traditional coding platforms such as C++, Java and the like. Ideally, one wants to declare a business outcome, in business terms, and have the platform automation fill in the execution details that will deliver this outcome:

- This is efficient, because a single declarative statement can generate a lot of conventional low-level code;

- Developers working at this higher level are adding value by dealing with business issues and solving business problems, rather than just wrestling with coding errors;

- It opens the possibility of end-user computing by “citizen developers” (business analysts or architects) who are closer to business issues and best placed to support the “mutable business”;

- It makes visualisation of “what the automation does” easier, which makes refactoring the automation (i.e., trying new ways of doing automated business) at the business level easier;

- Defect removal is easier, because business users can describe successful or failed business outcomes at the business level, using business language, which should reduce the possibility of misunderstandings between developers and the business.
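The idea of declaring an outcome and letting the platform fill in the execution details can be sketched as follows. Everything here is an assumption made for illustration: the `OUTCOME` structure, the step names and the tiny `STEP_LIBRARY` stand in for whatever a real low-code platform provides.

```python
# A business outcome declared in business terms (hypothetical structure).
OUTCOME = {
    "outcome": "invoice paid within 30 days",
    "steps": ["send_invoice", "remind_after_14_days", "escalate_after_30_days"],
}

# The "platform": pre-written building blocks keyed by step name.
STEP_LIBRARY = {
    "send_invoice": lambda ctx: f"email invoice {ctx['invoice_id']} to {ctx['customer']}",
    "remind_after_14_days": lambda ctx: f"schedule reminder for {ctx['invoice_id']} at day 14",
    "escalate_after_30_days": lambda ctx: f"schedule escalation for {ctx['invoice_id']} at day 30",
}


def compile_outcome(spec, library=STEP_LIBRARY):
    """Turn a declared outcome into a runnable function, or fail early."""
    missing = [step for step in spec["steps"] if step not in library]
    if missing:
        raise KeyError(f"no implementation for steps: {missing}")

    def run(ctx):
        return [library[step](ctx) for step in spec["steps"]]

    return run
```

A business user edits only `OUTCOME`; a typo in a step name is rejected when the outcome is compiled rather than when it runs in production.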

It is a good idea to map out a high level view of where you are going and what tools you expect to use. An example of such a view is:

There are companies marketing Digital Twin software engineering solutions – such as GE and Siemens. Then, various tools actually implement Outcome Automation – that is, they provide the largely pre-written codebase needed. These include (purely in alphabetical order):

- Blockchain, which is a technology enabling Distributed Ledger – an unalterable record of transactions managed on a peer-to-peer basis without central control.

- Event-driven Outcome Automation is an emerging area of interest. Events (such as a payment being made) occur and the corresponding Business Rules are triggered (a Rules Engine executes and manages the Business Rules). One might see both of these as subsets of RPA; but people mostly don’t.

- IOT Automation: The automation of actions in real-time overlaps with Event-driven Outcome Automation, and has special application in the world of IOT. This is a specialist area and a developing interest in Bloor.

- Low/No Code – the goal here is to automate development using code generation, large-scale code reuse and/or componentisation. By “No Code” we mean no or little low-level computer code, as a set of high-level declarative statements is code of a sort. In general, there’s usually going to be a point where this type of software hits a wall and has to resort to actual low-level coding (for interfaces with existing legacy systems, possibly) but exemplary products in the space will be aiming to push that wall as far away as possible (ideally to the point where no one is likely to reach it, as a “Low/No code environment” is unlikely to retain low-level coding skills). There is also the possible issue that “clever” unmaintainable, non-obvious declarative “code” is often more dangerous than a branch to good quality conventional code (in part because a line of bad declarative code can generate a lot of damage). In an effective Low/No code environment resorting to code should be discouraged but the decision to use code or not should be in the hands of the organisation, not of the tool designers.

- Microservices platforms are not traditionally thought of as automation, but in many ways do the same thing as componentisation in Low/No code development. They are at a lower level but are still focused around enabling a “write once, use often” paradigm. It’s not unreasonable to think of Low/No Code as an extension of the microservices model. Similarly, containerisation and the orchestration of containers offer a powerful capability for Outcome Automation and are a big feature of some open-source software development environments.

- Modularisation/Componentisation. Following on from the above, a large part of the Low/No Code paradigm is representing discrete bits of functionality as components, or modules. In many Low/No Code frameworks some of these are provided out of the box, and users are given the tools to create their own. More to the point, these are not solely the purview of Low/No Code. For example, it’s common for Cloud providers to offer what are effectively components of their own that you can plug into an organisation’s Cloud apps. Again, this is not dissimilar to the microservices model – with the exception that the services in question have been built by a (presumably) trusted external partner. This is also reminiscent of the “component marketplaces” offered by several Low/No Code vendors; and of the API Economy, where automated business components are orchestrated through their APIs.

- Process Mining (the use of a discovery tool to find the business processes actually in use in an organisation) is a complementary area, although not necessarily one we’ll want to cover in detail. It links with Business Process Automation (in essence, build a BPMN or UML model of the business processes in use and execute it).

- Robotic Process Automation (RPA), which is fairly self-explanatory: one can automate one’s processes using software robots and some vendors regard their RPA tools as, in effect, Low/No Code tools. RPA is often used for automating operational processes and high-level business processes at the “job” level – and, in a silo’d organisation, by people outside of the IT group. Consider two, equally valuable, use cases here:

- Simplifying the actions users need to take in order to do their job (Assisted RPA; i.e. you push a software button to do something rather than having to do the process manually);

- Unassisted or Autonomous RPA where processes just happen automatically without requiring any user input at all.

- Test Design Automation. Although we are going to cover this under different headings (such as Application Assurance), it is still worth mentioning, if just because there is currently a lot of crossover between RPA and TDA. In fact, some TDA vendors are putting out RPA tools which are, essentially, their TDA tools “with the serial numbers filed off”. For clarification, we are specifically referring to the sort of model-based testing that uses a flowchart or other system model to automatically create tests, when we talk of “Test Design Automation” here.
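To make the model-based testing idea in the last item concrete, the sketch below treats a flowchart as a small directed graph and derives one test case per path from the start node to an end node. The flow itself and its node names are hypothetical, and the sketch assumes the flowchart has no loops; commercial TDA tools do considerably more (data generation, coverage optimisation and so on).

```python
# Hypothetical flowchart: each node lists the nodes that can follow it.
# Assumption: the flow is acyclic, so the recursion below terminates.
FLOW = {
    "start": ["enter_details"],
    "enter_details": ["valid", "invalid"],
    "valid": ["confirm"],
    "invalid": ["show_error"],
    "confirm": [],
    "show_error": [],
}


def generate_paths(flow, node="start", prefix=()):
    """Return every path from node to an end node as a list of steps."""
    path = prefix + (node,)
    successors = flow[node]
    if not successors:  # end node: the path so far is one test case
        return [list(path)]
    paths = []
    for nxt in successors:
        paths.extend(generate_paths(flow, nxt, path))
    return paths
```

Each returned path is a candidate test case; when the model changes, regenerating the paths updates the test set along with it.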

Vendors will sell integrated tool suites, which will make building Digital Twins and Outcome Automation easier. Nevertheless, be careful, as tying one’s initial Digital Twin (say) too tightly to a particular vendor’s Digital Twin application might hinder you from ever approaching closer to the aspirational endpoint – this would be the ultimate vendor lock-in.

Many of the approaches to Outcome Automation we’ve listed here overlap; and many are quite similar in what they achieve, although they use different terminology. This suggests that there is an underlying structure which all of them are evolving towards:

- Componentisation of automated business systems as microservices; and

- The use of a model of the business (ultimately, a Digital Twin) to automatically generate and drive actions against these components.

This is perhaps just one possible paradigm for Outcome Automation, but it appears to be an effective one.

Further, it seems that the provision of component marketplaces is important, in practice; not only to avoid lock-in to an Outcome Automation vendor – with the ever-present danger that the vendor is bought by someone one would rather not do business with – but also so as to be seen to avoid lock-in.

Outcome Automation is the strategic focus of any Mutable Business, using information technology (IT) to support constant evolution in response to a changing business environment. As such, everybody engaged in producing or using IT should care about Outcome Automation. However, it will be of primary interest to the C-level strategic managers of a Mutable organisation.

Outcome Automation and Mutable

There’s no point building something quickly if it’s stale and useless within a week (although there can be an advantage to building something quickly to exploit, say, a short-term arbitrage opportunity, which makes money and then disappears). At the same time, maintenance is often a very significant expense. Therefore, the best Outcome Automation solutions will be easily and cheaply maintainable. In fact, many claim to be so productive that it is better to rewrite from scratch than attempt maintenance. We have doubts about this – however productive these platforms may be, regression testing might bite (proving that a replacement app still performs old functions unchanged, as well as new functions, isn’t trivial); and one risks losing valuable “intellectual property” – business experience – with throw-away apps. Indeed, making maintenance faster and easier will often be one of the major advantages of Outcome Automation (and in particular of the model-based approach described above). Without automation, maintenance of large, old, complex systems (and their test cases) can become hideously impractical, to the point that organisations will effectively stop maintenance – or, rather, will maintain only what they really can’t avoid maintaining – and leave the rest to rot. Poor maintenance practices can end up costing more overall than doing it properly would have cost, so that one potential benefit of Outcome Automation is that it makes proper (full system) maintenance viable, and more palatable to the business.

The extensibility of an Outcome Automation solution (with APIs that can be available for integration with conventionally coded systems) is also important. This is because, sooner or later, one might reach a point where an Outcome Automation tool can’t support the complexity of what one wants to do with it. This is bad, for obvious reasons, and when/if this happens, one wants an escape hatch, although this escape hatch should only be used when absolutely necessary, because an automation culture will probably lose manual coding skills.

Outcome Automation is about abstraction – working at a higher level. Being able to maintain at a higher abstraction layer addresses many maintenance issues: change is more efficient; maintenance is faster; and it is easier to communicate to business stakeholders. This helps Outcome Automation to be truly Mutable. In fact, Outcome Automation is extremely valuable for Mutable because it allows changes to your processes (including testing, development and so on) to be implemented automatically – and hence enables change to propagate through an organisation very quickly.

Outcome Automation and Quality

The maintenance of quality in Outcome Automation is vital because, although desired changes can be implemented at speed across an organisation, a misunderstanding of the business process or regulatory requirements can propagate equally quickly.

The old component-based programming concepts of, for example, Coupling and Cohesion are still important with Outcome Automation. Even with Agile practices and a no-code environment, it is still possible to write bad apps, just harder. If a component called “just total up all outstanding balances” also handles, say, opening new accounts, the application will likely be, or become, unmaintainable.
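The cohesion point can be shown with a short sketch that keeps the two responsibilities from the example in separate components. The `Ledger` type and both function names are hypothetical; the point is only that the totalling component has no side effects and that opening an account is a separate, explicit operation.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Ledger:
    # Hypothetical store: account id -> outstanding balance.
    balances: Dict[str, float] = field(default_factory=dict)


def total_outstanding_balances(ledger: Ledger) -> float:
    """Cohesive: only sums balances and never changes the ledger."""
    return sum(ledger.balances.values())


def open_account(ledger: Ledger, account_id: str, opening_balance: float = 0.0) -> None:
    """Separate component: the only place a new account is created."""
    if account_id in ledger.balances:
        raise ValueError(f"account {account_id} already exists")
    ledger.balances[account_id] = opening_balance
```

With this split, a change to how accounts are opened cannot silently alter how balances are totalled, which is what keeps each component maintainable.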

A lot of the detailed assurance of Outcome Automation quality is handled under different headings, such as Data Quality Assurance, Application Quality Assurance and so on. This doesn’t mean that it is not important to Outcome Automation!

Nevertheless, if quality is “fitness for purpose” an important aspect of quality for Outcome Automation is selecting the right tools for the job – which implies that the team using Outcome Automation tools knows what the job is. No matter how seductive the tool evangelists are, the actual users of Outcome Automation must:

- Ensure that the organisation’s infrastructure and culture can support Outcome Automation;

- Determine their own likely needs;

- Determine the capabilities of the tools on offer;

- Carry out a gap analysis, to determine how far short (or not) the various tools fall, with respect to the requirements;

- Commission a “proof of concept” with a development important enough to matter but not so important as to put the organisation out of business if it fails. It is important that the potential customer, not the vendor, is in control of the Proof of Concept;

- Select the tool, or tools, that are not only “fit for purpose” but fit for the organisation’s particular purposes, for further evaluation – looking at support, lifetime cost of ownership, vendor stability, etc.

If one doesn’t select “fit for purpose” Outcome Automation tools, there is a good chance that the products of Outcome Automation won’t be fit for purpose either.

Outcome Automation is such a wide field that most emerging IT trends have an impact. For example:

- AI – AI is “augmented intelligence” – machine and human working together – and machine learning is increasingly being seen as the only way to control the complexity of modern asynchronous systems; but it needs to be “explainable AI”.

- Consolidation into platform services. As enterprise IT on the asynchronous cloud becomes ever more complex, organisations use architectural models in order to understand this complexity – these models represent strategic Actions. It is important that these models are tightly coupled to the production environment through Outcome Automation. No-code and Low-code development tools help to facilitate this and we see companies such as Siemens acquiring Mendix and Rocket acquiring Uniface as examples of a general mergers and acquisitions trend.

- Digital Twin – this is a concept originating in Systems Engineering for complex, safety-critical projects in, say, the aerospace industry. A 1:1 computer model of an operational system is maintained and all system upgrades, additions and fixes are made to, and validated on, this model. The model is then copied (in a controlled manner) to Production, thus minimising or eliminating production downtime.

- Distributed Ledger (blockchain) – Distributed Ledger is a way of securely, reliably and robustly recording business transactions without (potentially) any need for a trusted third party or government to guarantee the underlying blockchain integrity. It would have applications for facilitating trade between (or across) countries which distrust each other, for example.

- Hybrid Cloud – Cloud is clearly the future, but there will be a place for on-premises cloud (for security, legal or pragmatic convenience) for the foreseeable future. So, purely on-premises solutions will migrate onto cloud; but pure cloud-native solutions will increasingly add on-premises capabilities as an option.

- No code – declarative instead of procedural development.

- Open Source – Companies such as IBM and Microsoft are increasingly moving to Commercial Open Source models for product development.

- Pay as you go – There is a general trend towards innovative ways of charging for software. Rental, “pay as you go” models will become the norm; but they will often be packaged into fixed-price bundles or transferable licence schemes for large enterprises.

- Quantum Computing – Quantum computing isn’t that important yet but early adopters (for example, some IBM customers) show that it can automate problem solutions that are intractable with classic computing – such as the design of custom chemical compounds. When, or if, it becomes part of the mainstream, Quantum Computing will extend Outcome Automation significantly (although only in some areas).

- Value Stream management – “A Development value stream includes the people and sequence of actions required to develop solutions that provide business value and are delivered to end users or customers through Operational value streams” – op. cit.

As Outcome Automation is a window into the whole development and analytics space (analytics generates Insights, which lead to requests for action, implemented with Outcome Automation), most large-enterprise-oriented vendors are major players in this space: such as (but not exclusively) IBM, Workday, SAP, ServiceNow and GitHub.

These companies are delivering new platforms and technologies, that facilitate the delivery of Outcome Automation. Digital Twin, for example, has come of age with the first country-scale implementation, in Singapore, facilitated by Bentley Systems. More generally, GE Digital is a major innovator in Digital Twin.

IBM is developing a transformative Blockchain platform based on the open-source Hyperledger Fabric, which includes the necessary governance for an enterprise-friendly shared, decentralized, cryptographically secured, and immutable digital ledger, which addresses the issues of accountability, privacy, scalability and security (including fault-tolerant consensus) facing a business. Enterprise blockchains are permissioned (with policy-based governed access) but not necessarily private.
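The “cryptographically secured, and immutable” property can be illustrated with a toy hash chain, in which each block stores the hash of the one before it. This is not Hyperledger Fabric's API and it has no peers, consensus or permissions; the block layout and function names are assumptions chosen to show why tampering with an earlier record is detectable.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, payload, previous_hash):
    """SHA-256 over a canonical JSON encoding of the block contents."""
    body = json.dumps(
        {"index": index, "payload": payload, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(chain, payload):
    """Add a block that commits to the hash of the previous block."""
    previous_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "payload": payload,
        "previous_hash": previous_hash,
        "hash": block_hash(index, payload, previous_hash),
    })
    return chain


def is_valid(chain):
    """Recompute every hash; any edit to an earlier block breaks the chain."""
    previous_hash = GENESIS_HASH
    for index, block in enumerate(chain):
        if block["index"] != index or block["previous_hash"] != previous_hash:
            return False
        if block["hash"] != block_hash(index, block["payload"], previous_hash):
            return False
        previous_hash = block["hash"]
    return True
```

Changing the payload of any earlier block makes `is_valid` return `False`, because the stored hash no longer matches the recomputed one. A real enterprise ledger adds distributed consensus and governed access on top of this basic tamper-evidence.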

We think that major analytics and service companies will increasingly acquire or develop low-code or no-code development tools, so as to complete their Outcome Automation story. Examples (again, not exclusively) are Siemens acquiring Mendix and Rocket acquiring Uniface.

Another instance of Outcome Automation consolidation is the generation of digital-ai, “an intelligent value-stream management platform”, from Collabnet with the acquisition of VersionOne (for Agile management and collaboration), XebiaLabs (one of the pioneers of Release Orchestration and Continuous Integration/Continuous Delivery) and Arxan Technologies (which provides multi-layer app security).

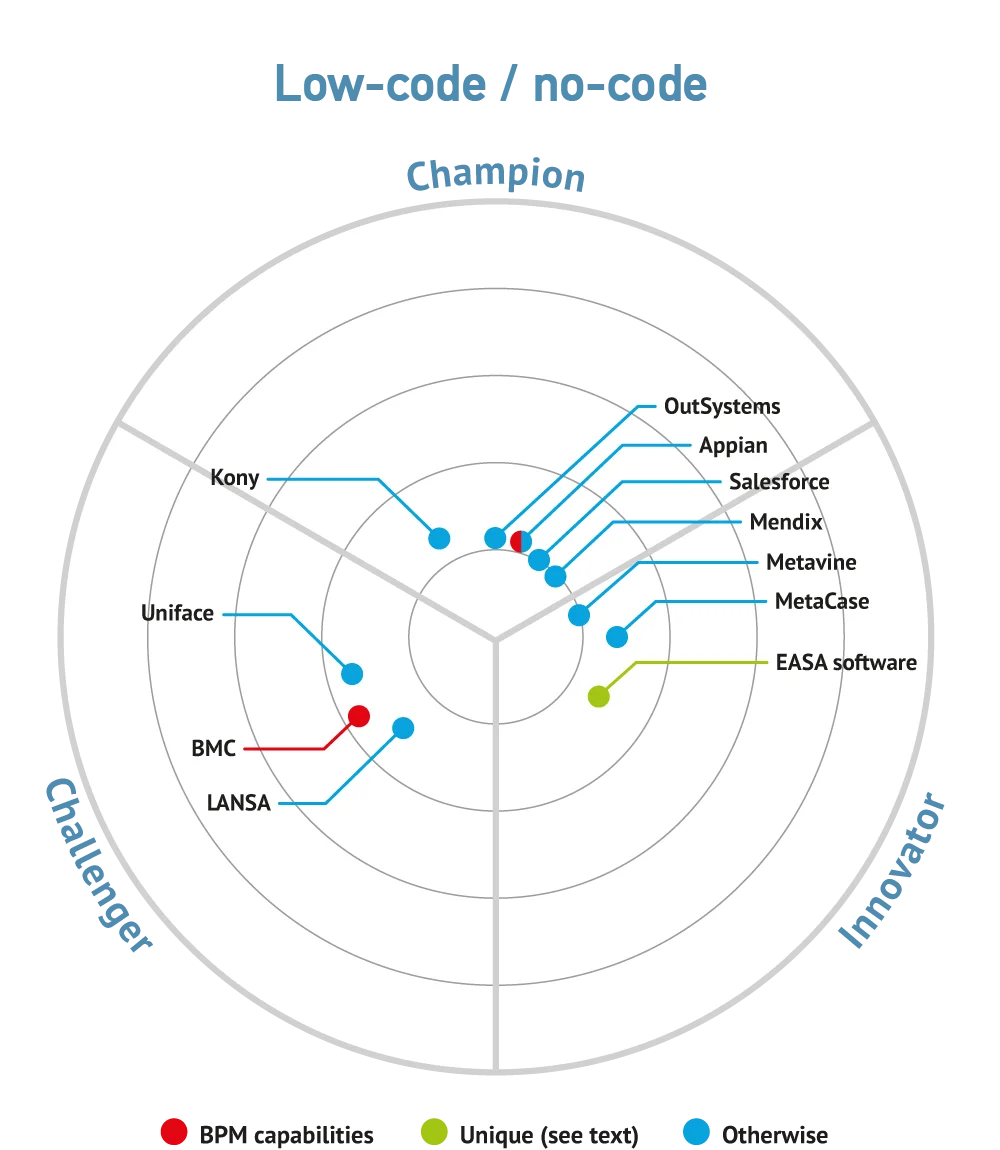

We find that most low-/no-code tools don’t bump into each other much, at the moment. This indicates that the marketplace is still wide open.
