N5 Technologies

Last Updated:

Analyst Coverage: Philip Howard and Daniel Howard

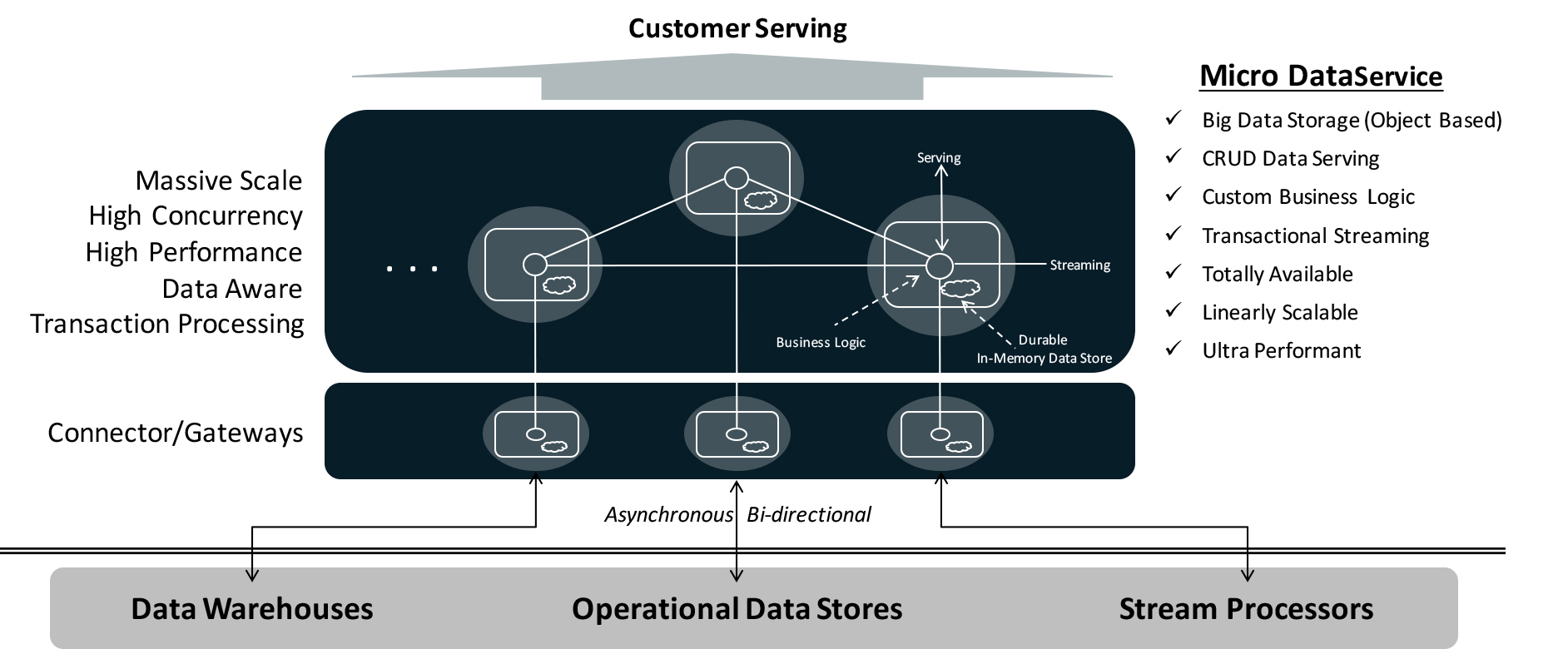

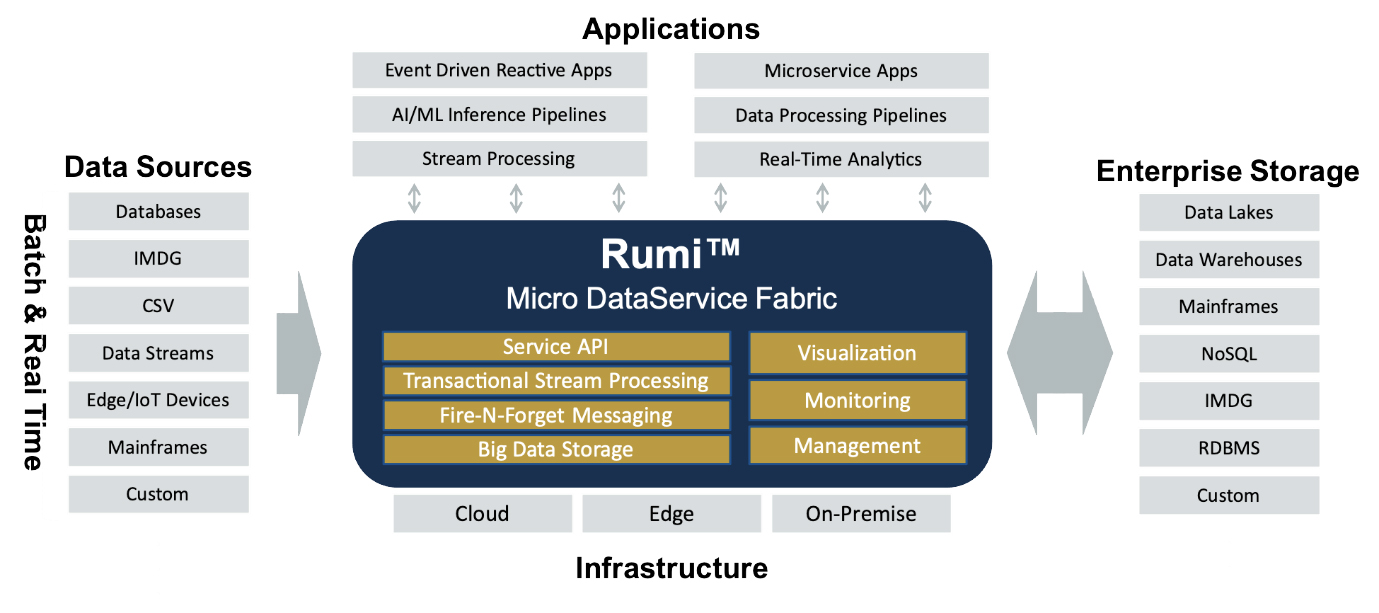

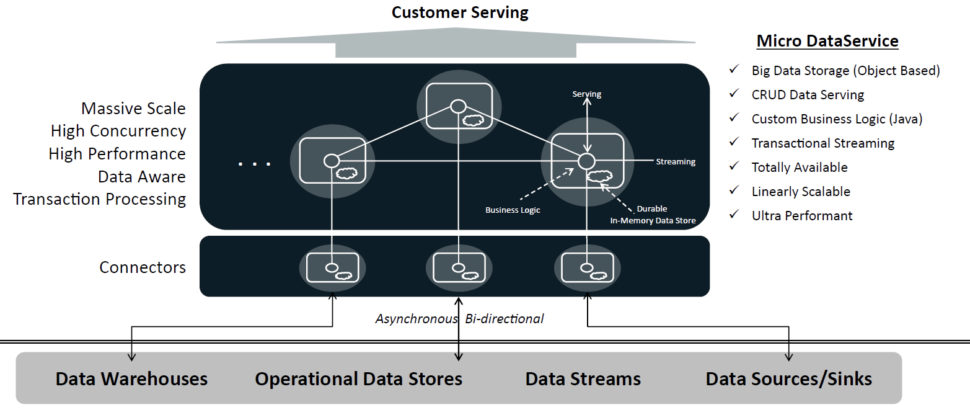

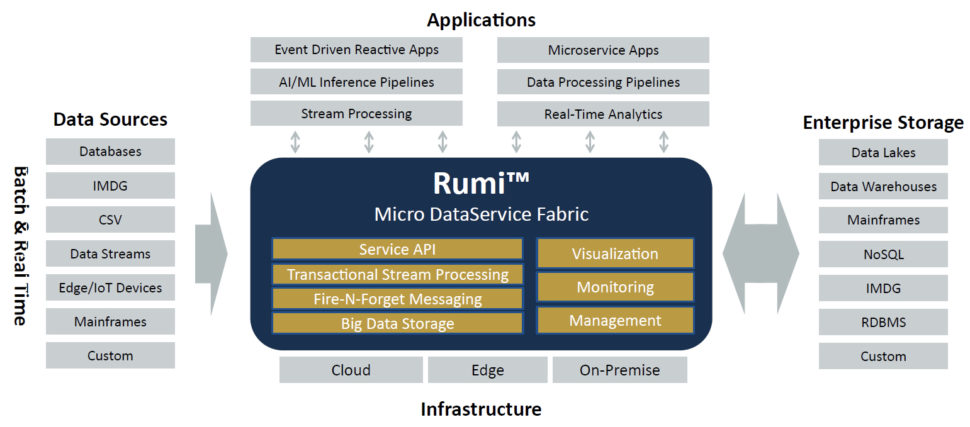

Rumi from N5 Technologies, Inc. addresses the need of modern enterprises to derive business insights in real time from massive volumes of historical and live data, while integrating this capability directly into their customer-serving transactional applications. This requirement is discussed in more detail here, in material compiled before N5 came to market with its Rumi product.

N5 and its founders come from the financial services sector, and Rumi was developed to power mission-critical, real-time risk management applications and ultra-low latency equity trading systems. The company is based in San Jose, California, and, at the time of writing, is privately funded. The company’s main route to market is direct sales, and it plans to partner selectively with systems integrators and industry vertical VARs. Product pricing is data-driven and subscription-based. Applications can be deployed in public and private clouds, on-premises data centres and edge data centres, and can be configured on virtualized or bare-metal infrastructure. The current offering supports Java-based applications; support for other programming languages is on the roadmap.