Vaticle


Analyst Coverage: Daniel Howard and Philip Howard

Vaticle, formerly Grakn Labs, is a British company that came to market in October 2016. Its flagship product, TypeDB (previously Grakn), is open source and free to download, although the company also offers commercial licensing and enterprise support at a premium.

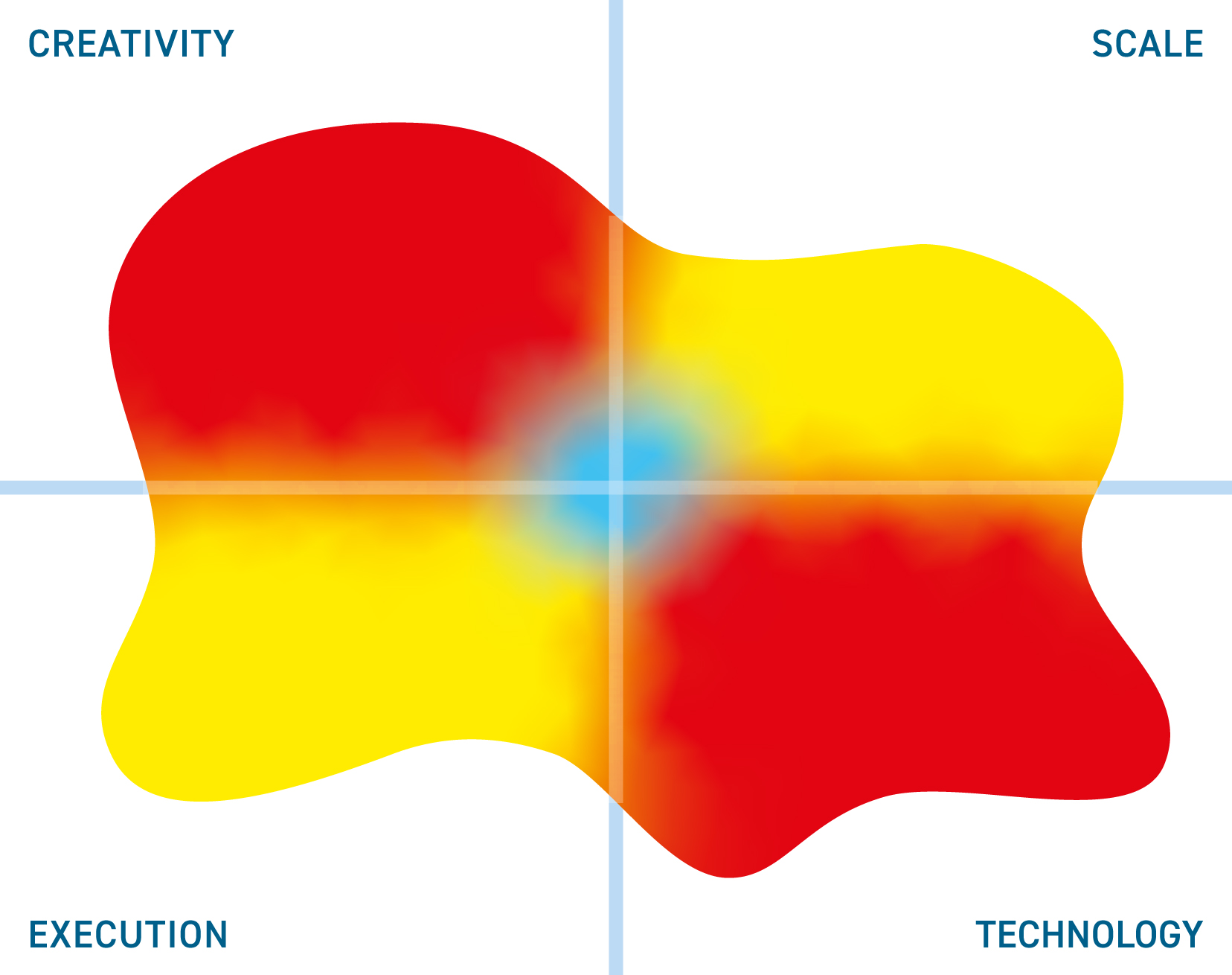

Historically, Vaticle’s solutions have found most success within the life sciences, telecommunications, and financial services sectors, although they are not exclusive to these industries. The company has also notably found success with users migrating from RDF graph databases, who found the semantics of those environments too complex.

Commentary

Coming soon.

Solutions

Research

Graph Databases (2023)

We discuss segmentation, movement, and trends within the graph market. We also include a comparative study of several graph products.

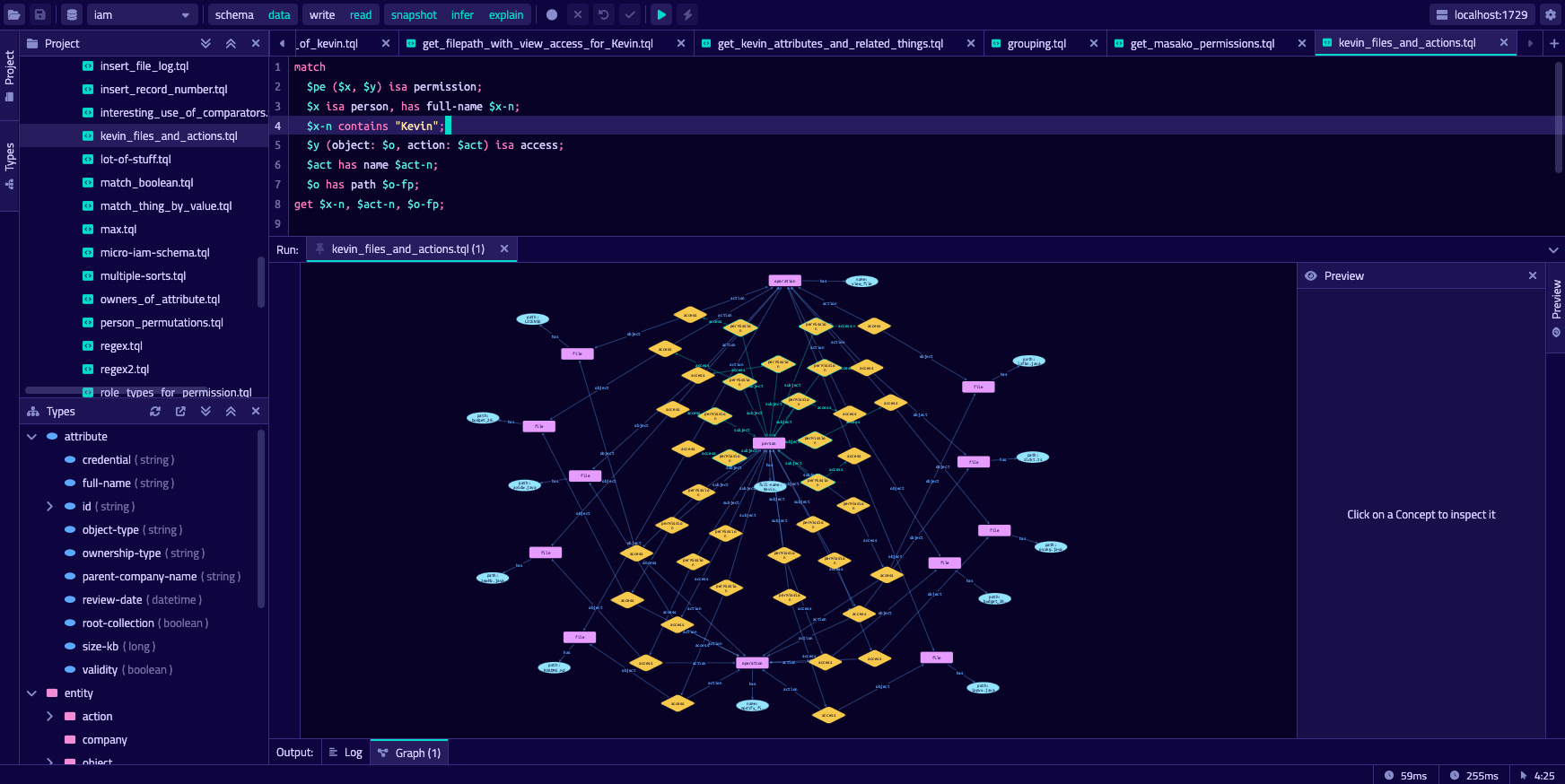

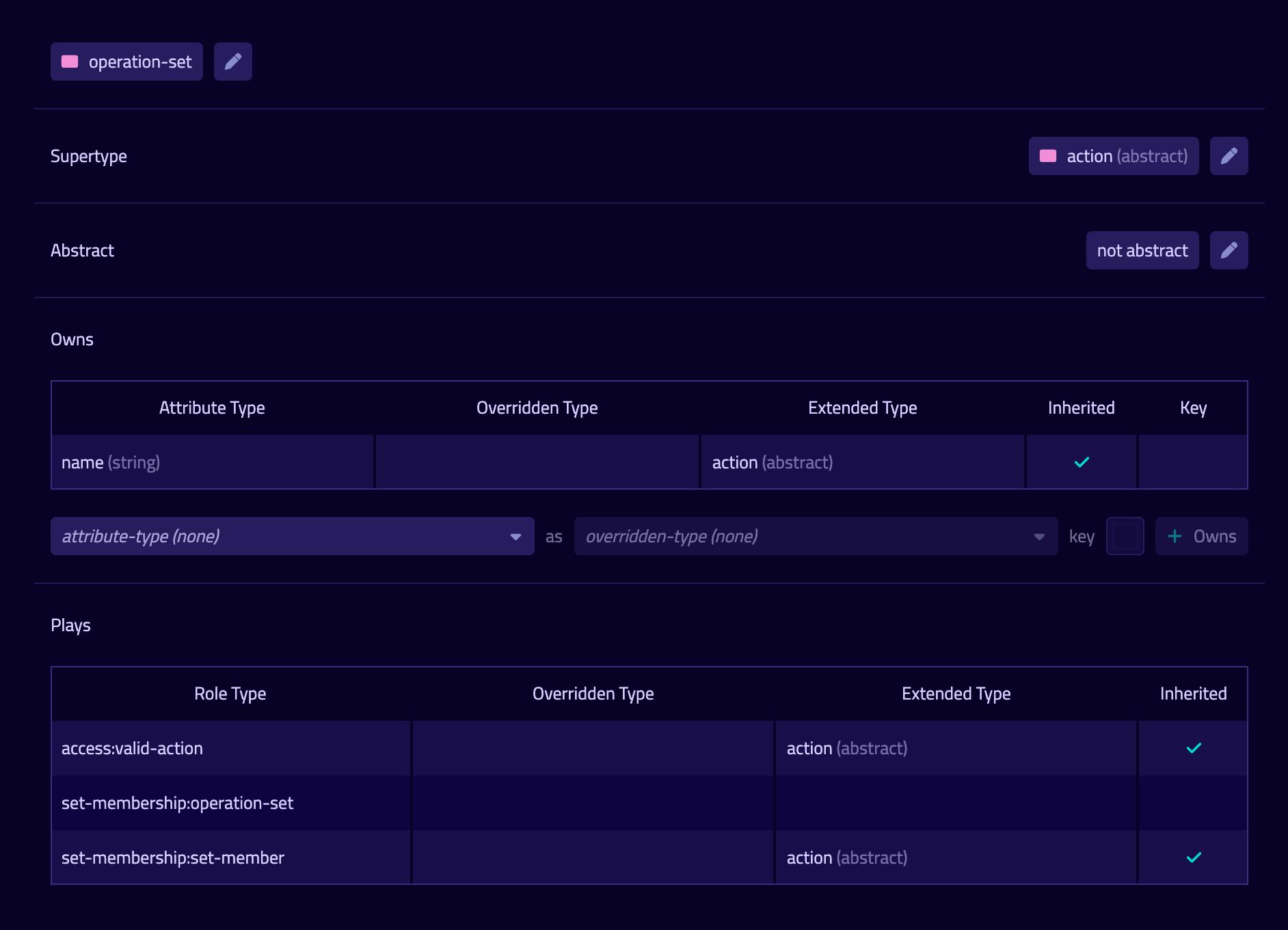

Vaticle TypeDB

TypeDB is an open source, freely available, strongly typed graph database. Vaticle, the company behind it, offers commercial licensing and enterprise support.

Graph Database (2020)

This is Bloor's fourth Market Update in this space, which discusses the state of the graph database market as of early 2020.

Grakn Core and Grakn KGMS (2020)

Grakn consists of a database, an abstraction layer and a knowledge graph, which is used to organise complex networks of data and make them queryable.

Data Assurance

This report is about assuring the quality and provenance of your data from both internal and external perspectives.