Datactics

Analyst Coverage: Philip Howard and Daniel Howard

Datactics is a data quality software vendor based in Belfast. Established in 1997, it has nearly 50 employees. It markets itself primarily to the financial sector, and its client list accordingly includes a number of major banks and information vendors, among them international institutions such as ING and UBS.

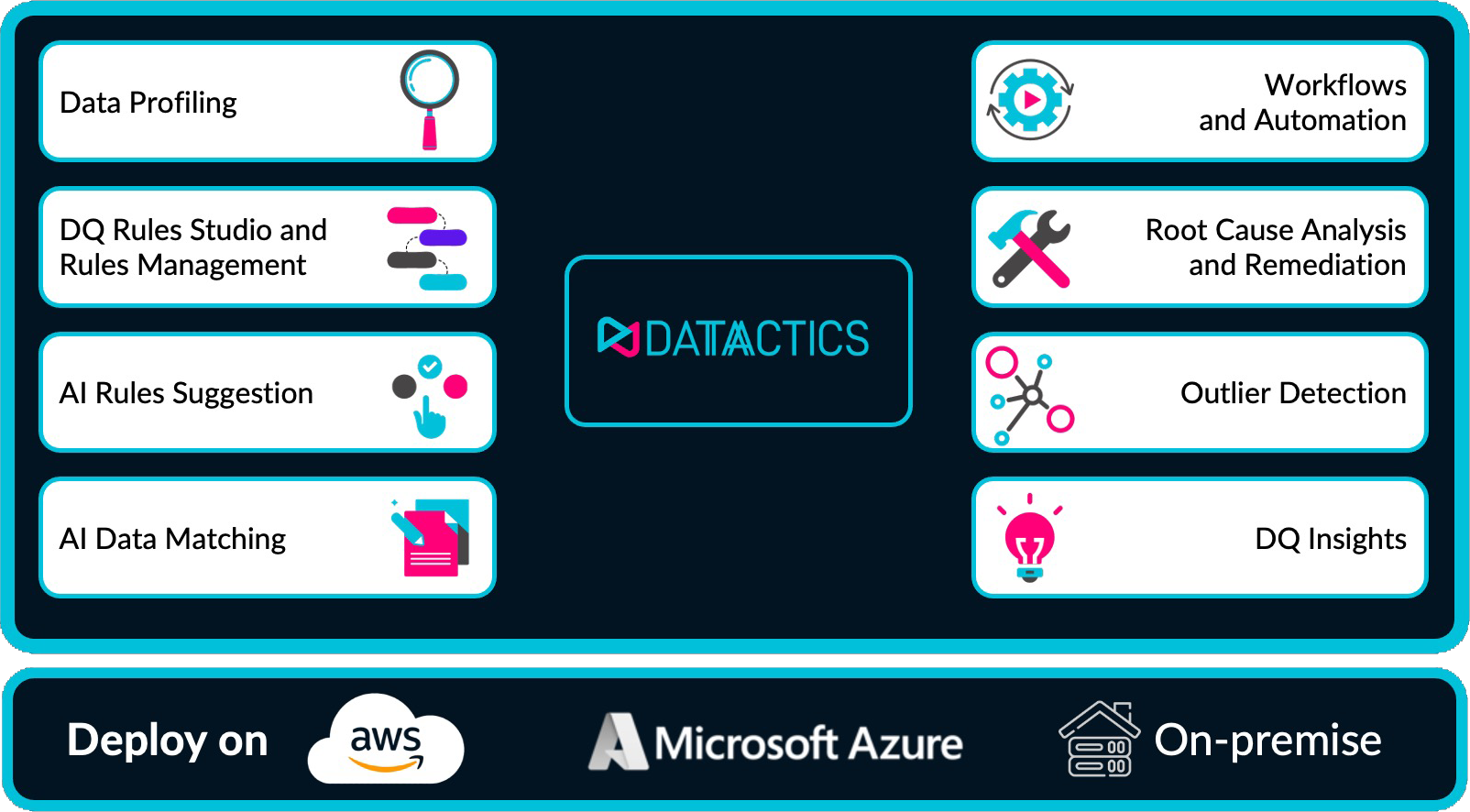

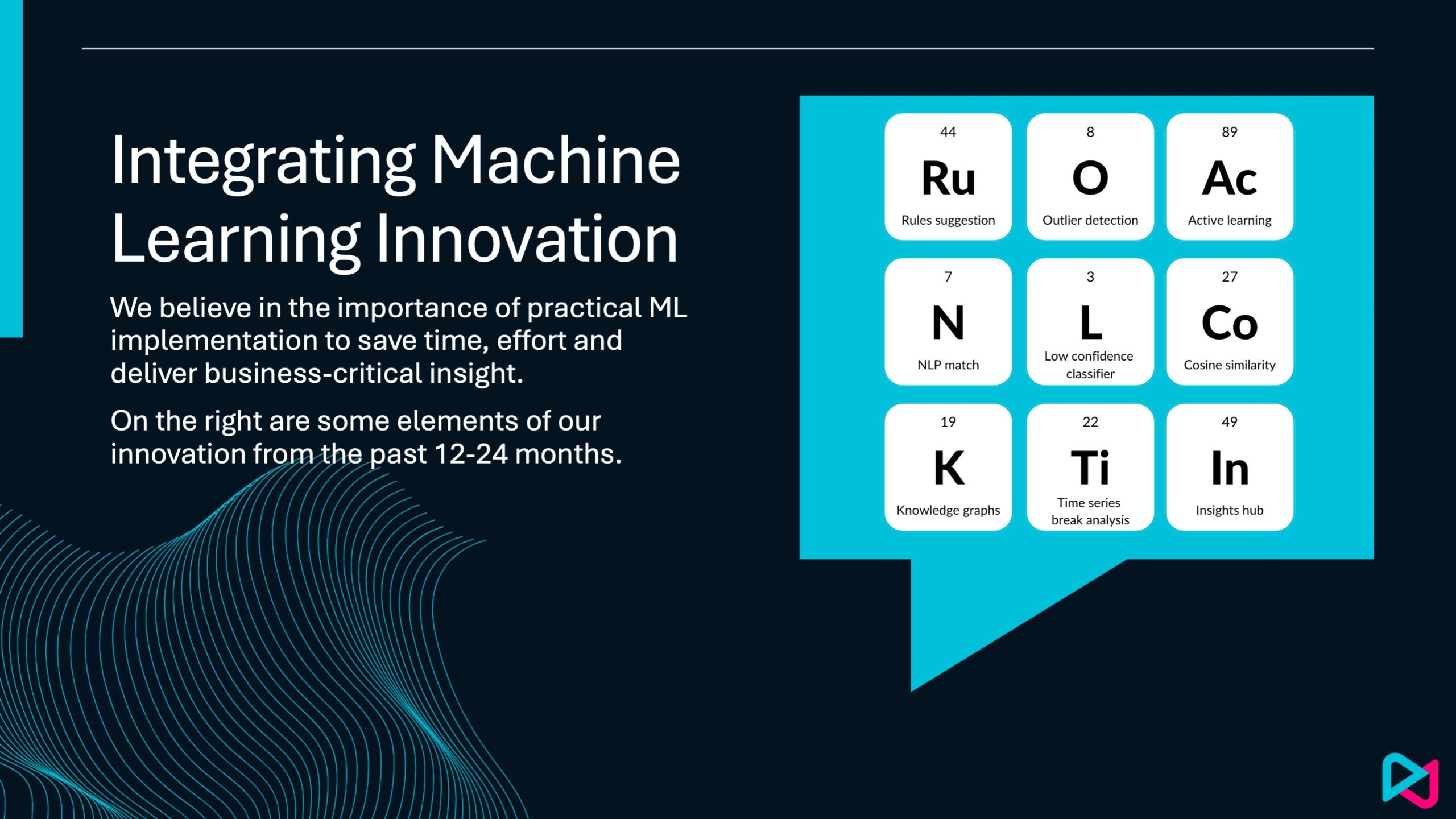

The company’s namesake product is a data quality platform oriented around self-service. It offers solutions for data quality, data matching, and single customer view, and places particular emphasis on supporting these solutions with transparent, explainable AI and machine learning capabilities. This lets you benefit from the advantages of AI while keeping it accountable, comprehensible, and trustworthy.