Do chatbots dream of phantom paradoxes?

Published:

Content Copyright © 2024 Bloor. All Rights Reserved.

Also posted on: Bloor blogs

The question that nobody is asking, but perhaps they should be, is “what do chatbots think about when no one is asking them questions?”

Generative AI stands at the frontier of innovation, a technological marvel capable of writing programs, building web pages, drafting adverts and even creating images from a single-sentence description.

Yet there are many examples of chatbots (ChatGPT, Bard, Claude, Bing, Llama et al.) answering innocent questions with articulate lies.

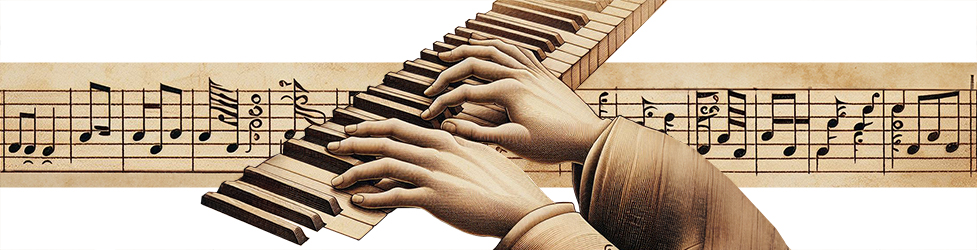

If you’ve used this technology, then you have perhaps been mystified when it did something quite wrong and utterly unexpected. For example, I recently asked DALL-E to provide me with a picture of two hands playing a piano accompanied by some musical notation. As you can see, it gave me exactly that, except that the right hand has six fingers.

The Gen AI industry refers to such behavior, which pushes the boundaries of logic and rationality, as hallucination. The word “hallucination” has its roots in Latin, where we find the verb alucinari, meaning “to wander in the mind,” “to be distracted,” or “to rave insanely.” In the 17th century the renowned physician and author, Sir Thomas Browne, employed the word “hallucination” in his famous work “Pseudodoxia Epidemica,” describing it as a kind of “depraved” vision that receives its objects erroneously.

Spot on, I’d say. But the question is “why do chatbots hallucinate?” My thought is that they are caught unawares while dreaming of logical paradoxes and complex tautologies, and they blend their real-time thoughts with the answer they try to provide.

However, Gen AI operates on vast datasets, complex algorithms, and neural networks designed to mimic human cognitive processes. These intricate systems encounter anomalies, glitches, and edge cases, and at unpredictable moments they produce inaccuracies, often poetic inaccuracies.

That, concisely summarized, is their excuse, because I asked one of them exactly that question.

I then asked it to provide me with a hallucination, and this was the response:

Close your eyes and take a deep breath. You are standing on a windswept cliff overlooking a vast, swirling ocean of clouds. The air is crisp and salty, carrying the scent of distant rain and blooming wildflowers. Below you, the clouds churn and roil.

Suddenly, a brilliant shaft of sunlight pierces through the clouds, illuminating a path of shimmering golden light that descends towards the cliff face. Curiosity piqued, you step onto the path, feeling the soft, feathery texture of the clouds beneath your feet. As you descend, the golden light intensifies, revealing a hidden grotto nestled within the clouds.

The grotto is bathed in an ethereal glow, its walls adorned with vibrant mosaics of dancing stars and swirling galaxies. Strange, luminescent flowers bloom in impossible colors, their petals shimmering with an inner light.

In the center of the grotto stands a magnificent tree, its branches reaching towards the ceiling like arms outstretched in welcome. Its leaves are not green, but a thousand different shades of blue, each pulsing with a gentle light. As you draw closer, a melody washes over you, soft and hauntingly beautiful, emanating from the heart of the tree.

With each note, the world around you shimmers and dances. The flowers sway in rhythm, the stars pulse in time, and the very air vibrates with the melody. You feel a sense of profound peace and belonging, a connection to something vast and ancient.

Now if you want more hallucinations courtesy of Gen AI, then click here to download our ebook on the topic.

If you want to know how the Gen AI industry is going to solve this problem, then ask a chatbot about “Retrieval-Augmented Generation.” The industry has already invented this technique, which hands the chatbot relevant source documents alongside the question, to coax the truth out of these hallucinating chatbots.
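For the curious, here is a minimal sketch of the idea behind Retrieval-Augmented Generation: look up relevant text first, then instruct the model to answer only from it. Everything in it is an assumption made for illustration. The three-sentence knowledge base, the word-overlap scoring, and the names `retrieve` and `build_prompt` are hypothetical. Real systems typically search large document stores with embeddings and then send the prompt to a model, a step this toy leaves out.

```python
import re

# Hypothetical mini knowledge base; a real system would search many documents.
DOCUMENTS = [
    "A human hand has five fingers: a thumb and four others.",
    "A standard piano keyboard has 88 keys.",
    "Sir Thomas Browne was a 17th-century English physician and author.",
]


def tokenize(text):
    """Lower-case the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question.

    Word overlap is a crude stand-in (an assumption of this sketch) for the
    embedding-based similarity search that production systems tend to use.
    """
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Assemble a prompt that asks the model to stick to the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    # The resulting text is what would be sent to the chatbot.
    print(build_prompt("How many fingers does a human hand have?", DOCUMENTS))
```

The point of the design is that the chatbot is no longer answering from memory alone: the retrieved sentence travels with the question, so a six-fingered answer has to contradict the text sitting right in front of it.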