Is Dataiku the key to unlocking Gen AI’s potential?

Content Copyright © 2023 Bloor. All Rights Reserved.

Also posted on: Bloor blogs

Imagine a Gen AI-driven platform where your data confesses all its insights, where the code writes itself, and where creativity dances cheek to cheek with automation. It doesn’t exist, at least not yet, but if it ever does burst into the AI market, it may emerge from the software labs of Dataiku. Since its genesis in 2013, Dataiku has established itself as a leading player in the ML and AI market, delivering an end-to-end AI platform that streamlines the AI lifecycle, from data preparation to effective deployment.

With the advent of Gen AI, Dataiku was one of the first companies in its field to seize the initiative. Gen AI could legitimately be described as a “whole new ball game,” but it was a ball game nevertheless: it required a data service similar to that required by ML and other varieties of AI and BI.

The LLM Mesh

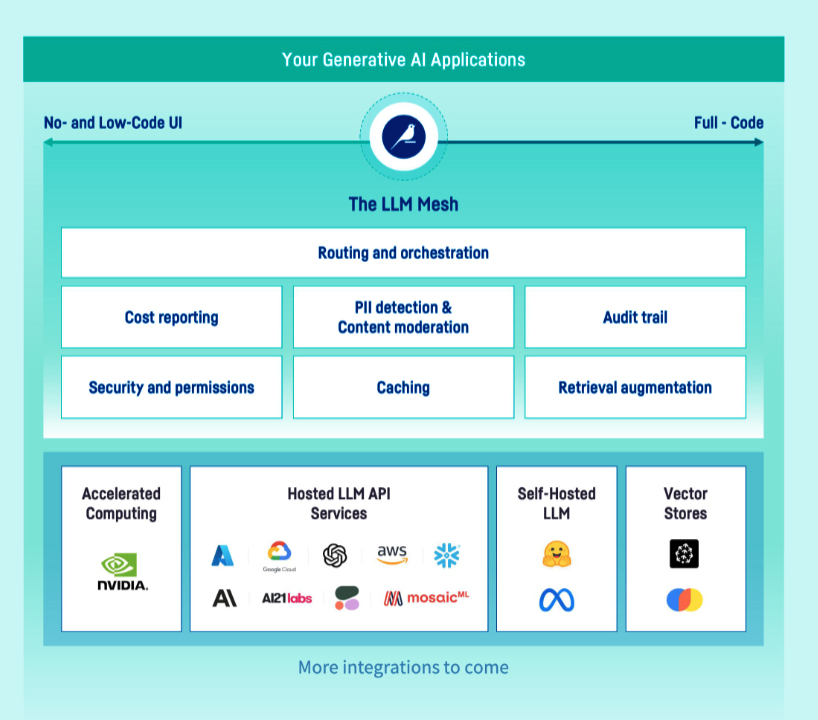

As far as we can tell, Dataiku seeks to provide the same robust data service to Gen AI Large Language Models (LLMs) that it already provides to other species of AI. The diagram above represents Dataiku’s LLM Mesh, which you can think of as a meticulously engineered bridge that serves one or more operational LLMs, providing them with seamless communication, resource sharing, and collaborative capability.

Roughly described, the components of the LLM Mesh have the following functions:

Routing and Orchestration:

Think of the LLM Mesh as having an up-front traffic controller. Incoming tasks are analysed on factors such as model expertise, workload distribution, and resource availability, and are then directed to the appropriate LLM. The LLM Mesh is also built for multi-step work: complex workflows involving several LLMs can be managed, with data flowing smoothly from one stage to the next. Think, for example, of sentiment analysis feeding into personalised chatbot responses, orchestrated by the LLM Mesh.
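To make the traffic-controller idea concrete, here is a minimal Python sketch of capability-based routing feeding a two-stage pipeline. It is not Dataiku’s API: the `ModelEndpoint` class, the model names and the routing rule (only models that list the required skill are eligible, and the least-loaded one wins) are hypothetical assumptions, and the "models" are stub functions rather than real LLM calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ModelEndpoint:
    """A hypothetical LLM endpoint registered with the router."""
    name: str
    skills: set[str]      # task types this model is considered expert in
    capacity: int         # maximum concurrent requests it should take
    in_flight: int = 0    # current workload
    call: Callable[[str], str] = lambda text: text  # stub standing in for a real LLM call

    def available(self) -> bool:
        return self.in_flight < self.capacity


def route(task_type: str, endpoints: list[ModelEndpoint]) -> ModelEndpoint:
    """Pick an available endpoint with the required skill, preferring the least loaded."""
    candidates = [e for e in endpoints if task_type in e.skills and e.available()]
    if not candidates:
        raise LookupError(f"no available model for task type {task_type!r}")
    return min(candidates, key=lambda e: e.in_flight / e.capacity)


def run_pipeline(text: str, stages: list[str], endpoints: list[ModelEndpoint]) -> str:
    """Chain stages so that each stage's output becomes the next stage's input."""
    for stage in stages:
        endpoint = route(stage, endpoints)
        endpoint.in_flight += 1
        try:
            text = endpoint.call(text)
        finally:
            endpoint.in_flight -= 1  # release the slot even if the call fails
    return text


# Hypothetical endpoints: a sentiment model whose output feeds a chat model.
endpoints = [
    ModelEndpoint("sentiment-small", {"sentiment"}, capacity=4,
                  call=lambda t: "negative" if "late" in t else "positive"),
    ModelEndpoint("chat-large", {"chat"}, capacity=2,
                  call=lambda sentiment: f"Reply tuned for a {sentiment} customer"),
]
print(run_pipeline("My order arrived late", ["sentiment", "chat"], endpoints))
# Reply tuned for a negative customer
```

A production router would also weigh cost, latency and fallbacks, but the shape is the same: classify the task, filter eligible models, then choose among them.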

Cost Reporting:

Managing LLMs can be a financial black hole, and will inevitably prove to be so for some early adopters. The LLM Mesh seeks to rein this in, throwing light on resource usage and providing detailed cost reports that track expenditure per LLM, per project, and even per individual task. This is not just about cost control; it is also about optimising Gen AI investments.
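The kind of per-LLM, per-project and per-task breakdown described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Dataiku’s reporting engine: the model names and the flat price-per-1,000-tokens table are hypothetical, and real providers often price input and output tokens differently.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices per 1,000 tokens; real prices vary by provider and model.
PRICE_PER_1K_TOKENS = {"model-a": 0.5, "model-b": 2.0}
GROUPINGS = {"model", "project", "task"}


@dataclass(frozen=True)
class UsageRecord:
    """One LLM call, tagged with the model, project and task that incurred it."""
    model: str
    project: str
    task: str
    tokens: int

    @property
    def cost(self) -> float:
        return self.tokens / 1000 * PRICE_PER_1K_TOKENS[self.model]


def cost_report(records: list[UsageRecord], by: str) -> dict[str, float]:
    """Total cost grouped by one attribute: 'model', 'project' or 'task'."""
    if by not in GROUPINGS:
        raise ValueError(f"cannot group by {by!r}")
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[getattr(record, by)] += record.cost
    return dict(totals)


records = [
    UsageRecord("model-a", "support-bot", "summarise", 4000),
    UsageRecord("model-b", "support-bot", "answer", 1000),
    UsageRecord("model-b", "marketing", "draft-copy", 3000),
]
print(cost_report(records, by="project"))  # {'support-bot': 4.0, 'marketing': 6.0}
print(cost_report(records, by="model"))    # {'model-a': 2.0, 'model-b': 8.0}
```

Tagging every call with its project and task at the moment it is made is what makes the later breakdown possible; retrofitting that attribution from raw provider invoices is much harder.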

Audit Trail:

Every action in the LLM Mesh leaves a digital footprint. An audit trail meticulously records all data flow, model usage, and user interactions. This not only enhances compliance with regulations – a known pain-point for some Gen AI implementations – but also allows for troubleshooting and performance analysis.
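One common way to make such a trail trustworthy for auditors is to chain each entry to the hash of the one before it, so that later edits become detectable. The Python sketch below illustrates that general technique; the source does not say how Dataiku stores its audit records, and the field names here are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    """Append-only log in which each entry stores the hash of the previous entry."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, user: str, action: str, model: str, detail: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "model": model,
            "detail": detail,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the hash itself, with keys in a stable order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Return False if any stored entry was edited or the chain of hashes is broken."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("alice", "prompt", "model-a", "summarise Q3 report")
trail.record("bob", "prompt", "model-b", "draft reply to ticket")
print(trail.verify())                 # True
trail.entries[0]["user"] = "mallory"  # simulate after-the-fact tampering
print(trail.verify())                 # False
```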

PII Detection and Content Moderation:

Detection of personally identifiable information (PII) is necessary for governance and regulatory reasons. Incoming data is screened for PII before entering the LLM Mesh, preventing sensitive information from reaching models altogether. The Mesh also continuously monitors data flow to identify any PII that might slip through the pre-processing checks. The LLM Mesh also guards against unintentional data leaks and moderates harmful content: LLMs can be trained to detect offensive or hateful language, proactively flagging potentially harmful outputs.
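A pre-processing PII check of the kind described above might look like the Python sketch below. The two regular expressions are deliberately simple, illustrative assumptions; the source does not describe Dataiku’s detection method, and production systems typically combine many more patterns with machine-learned entity recognition.

```python
import re

# Illustrative patterns only: real PII detection covers far more types and formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\+?\d[\d ()-]{7,}\d"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace detected PII with placeholders; return the new text and the PII types found."""
    found: list[str] = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


def screen_prompt(prompt: str, block_on_pii: bool = False) -> str:
    """Check applied before a prompt is forwarded to any model: redact, or refuse outright."""
    redacted, found = redact(prompt)
    if found and block_on_pii:
        raise ValueError(f"prompt blocked: contains {', '.join(found)}")
    return redacted


print(screen_prompt("Email jane.doe@example.com or call +44 20 7946 0958."))
# Email [EMAIL] or call [PHONE].
```

Whether to redact and continue or to block the request entirely is a policy decision, which is why the sketch exposes it as a flag.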

Security and Permissions:

The LLM Mesh is fortified with robust security protocols, including access controls, data encryption, and intrusion detection systems. Granular permissions ensure only authorised users can access specific models and data sets.
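Granular permissions of this sort are often modelled as role-based access control. The Python sketch below shows a deny-by-default check over models and data sets; the role names, the `resource:name` naming scheme and the `AccessPolicy` class are hypothetical, and nothing here reflects Dataiku’s actual permission model.

```python
from dataclasses import dataclass, field


@dataclass
class AccessPolicy:
    """Hypothetical role-based access control over models and data sets."""
    user_roles: dict[str, set[str]] = field(default_factory=dict)
    # role -> set of (resource, action) pairs, e.g. ("model:summariser", "query")
    grants: dict[str, set[tuple[str, str]]] = field(default_factory=dict)

    def grant(self, role: str, resource: str, action: str) -> None:
        self.grants.setdefault(role, set()).add((resource, action))

    def assign(self, user: str, role: str) -> None:
        self.user_roles.setdefault(user, set()).add(role)

    def is_allowed(self, user: str, resource: str, action: str) -> bool:
        # Deny by default: access requires an explicit grant through one of the user's roles.
        return any(
            (resource, action) in self.grants.get(role, set())
            for role in self.user_roles.get(user, set())
        )


policy = AccessPolicy()
policy.grant("analyst", "model:summariser", "query")
policy.grant("analyst", "dataset:sales", "read")
policy.grant("admin", "dataset:hr", "read")
policy.assign("alice", "analyst")

print(policy.is_allowed("alice", "model:summariser", "query"))  # True
print(policy.is_allowed("alice", "dataset:hr", "read"))         # False
```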

Caching:

The LLM Mesh implements a caching strategy so that frequently used data is retained and reused when the same LLM activity recurs, rather than being fetched or computed afresh each time. This helps to reduce compute costs, improve responsiveness and boost scalability.
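The cost saving comes from paying for a model call only on a cache miss. The Python sketch below assumes an exact-match, least-recently-used cache keyed on the model name and prompt; the source does not say what Dataiku caches or how entries are keyed, and `fake_llm` is a hypothetical stand-in for a real model.

```python
from collections import OrderedDict
from typing import Callable


class ResponseCache:
    """Least-recently-used cache of LLM responses keyed on (model, prompt)."""

    def __init__(self, max_entries: int = 1024) -> None:
        self.max_entries = max_entries
        self._store: OrderedDict[tuple[str, str], str] = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get_or_call(self, model: str, prompt: str, call: Callable[[str], str]) -> str:
        key = (model, prompt)
        if key in self._store:
            self.hits += 1
            self._store.move_to_end(key)     # mark as most recently used
            return self._store[key]
        self.misses += 1
        response = call(prompt)              # compute is only spent on a miss
        self._store[key] = response
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return response


calls: list[str] = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)                     # count how often the "model" really runs
    return prompt.upper()


cache = ResponseCache(max_entries=2)
for p in ["hello", "hello", "world", "again", "hello"]:
    cache.get_or_call("model-a", p, fake_llm)
print(cache.hits, cache.misses, len(calls))  # 1 4 4
```

Exact-match caching only helps when identical requests recur; "semantic" caches that also match similar prompts trade some accuracy for a higher hit rate.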

Retrieval-Augmented Generation (RAG):

Finally, the LLM Mesh fully supports RAG. This has rapidly become a necessary capability for LLM implementations, both because of the frequently reported “LLM hallucinations” and because of more general LLM inaccuracy. (LLMs do not think in terms of “true and false”.) Dataiku’s implementation of RAG helps to reduce these defects.

The way this works is that when any input is submitted to the LLM, a query is also submitted to the RAG capability, which then searches reliable internal or external data sources for relevant data. For example, if the input requests a product description, the RAG capability searches through product specifications, reviews, competitor comparisons, and so on. The retrieved information is then “injected” into the LLM’s context window, providing it with richer context and additional knowledge.

Armed with this augmented knowledge, the LLM generates a more accurate and relevant product description, mentioning key features, addressing customer concerns, and even incorporating current market trends.
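The retrieve-then-inject flow can be sketched in Python as follows. This is a toy under stated assumptions, not Dataiku’s implementation: the documents are hypothetical, simple keyword overlap is swapped in for the embedding-based vector search that RAG systems usually use, and the final call to the LLM is omitted.

```python
import re

# Hypothetical knowledge base standing in for product specifications, reviews, and so on.
DOCUMENTS = {
    "spec": "The Aero X2 kettle holds 1.7 litres and boils in under three minutes.",
    "review": "Customers praise the Aero X2 for its quiet boil but want a longer cord.",
    "policy": "Refunds are issued within 14 days of a return being received.",
}
STOPWORDS = {"a", "the", "for", "and", "of", "in", "to", "its", "but", "are", "is"}


def tokens(text: str) -> set[str]:
    """Lower-cased content words, ignoring a few common stopwords."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(query: str, k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query (a stand-in for vector search)."""
    q = tokens(query)
    ranked = sorted(DOCUMENTS.values(), key=lambda d: len(q & tokens(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokens(d)]  # drop passages with no overlap


def build_prompt(user_input: str, k: int = 2) -> str:
    """Inject the retrieved passages into the context ahead of the user's request."""
    context = "\n".join(f"- {passage}" for passage in retrieve(user_input, k))
    return (
        "Answer using only the context below; say so if it is insufficient.\n"
        f"Context:\n{context}\n\n"
        f"Request: {user_input}"
    )


print(build_prompt("Write a product description for the Aero X2 kettle"))
```

Here the specification and the review are retrieved and the unrelated refund policy is left out, so the model sees only material relevant to the request. Instructing the model to rely on the supplied context is what ties its answer to the retrieved facts.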

The benefits of this go a little beyond reducing “hallucinations” and factual errors. The final output will likely be more creative and more relevant than it might otherwise have been.

Integrations

The layer at the bottom of the diagram shows Dataiku’s current integrations with widely used Gen AI components. The number of technology integrations, already fairly extensive, will likely grow in the coming months.

In Summary

Dataiku, a leading player in the ML and AI market, has swiftly embraced Gen AI and engineered a well-designed LLM data platform.

Dataiku’s LLM Mesh is a carefully engineered bridge that facilitates seamless communication, resource sharing, and collaboration for Large Language Models (LLMs). Thus, Dataiku now provides the same kind of robust data service to LLMs as it does to other species of AI. Organisations that are considering adopting Gen AI would be wise to investigate Dataiku’s capabilities.