Big Software

Last Updated:

Analyst Coverage: Paul Bevan

Big Software represents the start of a new technological era in which each of the major capabilities at the heart of contemporary cloud and data centre operations can be created and managed as software: data storage as software, data processing as software, data networking as software. With storage, processing and networking forming a single continuum of software, innovative capabilities are now being developed to further transform data management operations and, in turn, the delivery of the mutable enterprise.

Figure 1 – ACG Research predicts 50% TCO reduction for Network Service Providers utilising SDN over the present mode of operation.

Why is it important (hot)?

We believe this new era of Big Software is bringing reduced costs, faster speed to market and greater business agility.

From storage to network switches and even load balancers, clever software has separated the control plane from the data plane. This means that you can use much cheaper industry-standard server hardware to run functions that previously relied on sophisticated (expensive) integrated proprietary hardware and software solutions from major suppliers. For example, software defined load balancers can be implemented for the same cost as the maintenance alone on the hardware solutions they replace.
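To make the control plane and data plane split concrete, here is a minimal sketch of a software load balancer in Python. It is an illustration only, not any vendor's implementation: the class names `ControlPlane` and `DataPlane`, the backend names, and the round-robin policy are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ControlPlane:
    """Hypothetical control plane: holds policy (which backends exist, which are healthy)."""
    backends: dict[str, bool] = field(default_factory=dict)

    def register(self, name: str) -> None:
        self.backends[name] = True

    def mark_down(self, name: str) -> None:
        self.backends[name] = False

    def healthy(self) -> list[str]:
        # dicts preserve insertion order, so the pool order is stable
        return [name for name, ok in self.backends.items() if ok]


class DataPlane:
    """Hypothetical data plane: picks a backend per request using the current policy."""

    def __init__(self, control: ControlPlane) -> None:
        self.control = control
        self._counter = count()

    def route(self) -> str:
        pool = self.control.healthy()
        if not pool:
            raise RuntimeError("no healthy backends")
        # simple round-robin over whatever the control plane reports as healthy
        return pool[next(self._counter) % len(pool)]


control = ControlPlane()
for name in ("app-1", "app-2", "app-3"):
    control.register(name)

balancer = DataPlane(control)
first_three = [balancer.route() for _ in range(3)]

# A policy change touches only the control plane; the forwarding code is unchanged.
control.mark_down("app-2")
after_change = [balancer.route() for _ in range(2)]
```

The point of the sketch is that reconfiguration is a software call against the control plane, which is why such a function can run on any industry-standard server rather than a dedicated appliance.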

In application development, software automation is enabling continuous development and deployment. Serverless computing allows your developers to concentrate on the application rather than worrying about different builds for different target servers. And low-code/no-code development environments allow business functions to be translated into working applications with little or no hand-written code. All this means that you can keep ahead of your competitors by bringing new applications to market faster and more often.

Gone are the complex issues of systems integration. The development of microservices, their use and re-use, the widespread use of open and industry-standard APIs (Application Programming Interfaces) and the burgeoning use of containers, such as Docker, make it easy not only to build and deploy new apps, but also to take them down and reconfigure them very quickly. This enables IT to react at pace to changes in the business environment.

How does it work?

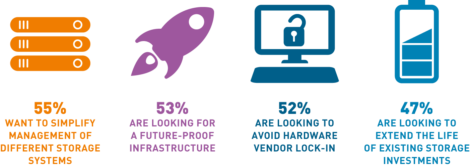

Figure 2 – In 2017 a Gartner survey asked “What are the business drivers for implementing software-defined storage?”

Fundamentally, Big Software is about abstraction. All hardware in compute infrastructure requires very low-level machine code to make it run. Through the process of abstraction, a programmer hides all but the relevant details of an object to reduce complexity and increase efficiency. Historically, the instructions telling a piece of hardware what to do resided in firmware (software instructions specific to that piece of hardware). This made infrastructure upgrades time-consuming and costly, and often made it difficult to switch vendors.

Now, abstraction has enabled the development of software-based controllers for storage and a wide range of networking equipment. This makes it possible to configure, and reconfigure, your infrastructure without worrying about the underlying hardware, thereby improving IT agility. This abstraction also allows the software to utilise industry standard hardware to perform a range of different tasks, again increasing agility, but also reducing costs.
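As a rough illustration of this kind of abstraction, the Python sketch below shows a controller that provisions storage through a common interface while the hardware-specific detail stays inside interchangeable drivers. Every name here (`StorageDriver`, `VendorADriver`, `CommodityDriver`, `StorageController`) and every command string is hypothetical and invented for the example; real software-defined storage products expose their own APIs.

```python
from abc import ABC, abstractmethod


class StorageDriver(ABC):
    """Hypothetical driver interface: the only place hardware detail lives."""

    @abstractmethod
    def create_volume(self, name: str, size_gb: int) -> str:
        ...


class VendorADriver(StorageDriver):
    """Stands in for a proprietary array with its own command syntax."""

    def create_volume(self, name: str, size_gb: int) -> str:
        return f"vendor-a: lun create {name} {size_gb}G"


class CommodityDriver(StorageDriver):
    """Stands in for industry-standard servers addressed in bytes."""

    def create_volume(self, name: str, size_gb: int) -> str:
        return f"commodity: allocate --name {name} --bytes {size_gb * 1024**3}"


class StorageController:
    """Software controller: callers ask for a volume, not for a device command."""

    def __init__(self, driver: StorageDriver) -> None:
        self.driver = driver

    def provision(self, name: str, size_gb: int) -> str:
        if size_gb <= 0:
            raise ValueError("size_gb must be positive")
        return self.driver.create_volume(name, size_gb)


# The calling code is identical; only the driver behind the controller changes.
on_proprietary = StorageController(VendorADriver()).provision("db", 10)
on_commodity = StorageController(CommodityDriver()).provision("db", 10)
```

Swapping `VendorADriver` for `CommodityDriver` changes nothing for the caller, which is the property that makes reconfiguration and vendor switching cheaper.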

The debate for enterprise CIOs will revolve around the traditional question of buy versus build. Vendors have been building software defined capabilities into packaged hardware and software offerings known as Hyper-Converged Infrastructure (HCI). These offer a level of cost reduction, but also simplified implementation and management. Lower costs might be achievable by using a best-of-breed tool approach and a framework such as OpenStack. This needs to be set against the skills required to build and implement systems using a Lego-brick approach.

A 2015 report from Deloitte showed that, for their Fortune 50 clients, moving eligible systems to a Software Defined Data Centre (SDDC) can reduce spending on those systems by approximately 20%. They further stated that these savings could be realised with current technology offerings and may increase over time as new products emerge and tools mature. Individual Software Defined Storage (SDS) and Software Defined Networking (SDN) implementations are seeing TCO savings of around 50% over traditional approaches. Add to this the advantages of agility and faster deployment of new applications and the business case becomes compelling.
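The arithmetic behind such a business case can be sketched in a few lines of Python. Only the 20% reduction comes from the Deloitte figure quoted above; the baseline spend and the share of systems that are eligible are made-up inputs for illustration, and the function name `projected_spend` is hypothetical.

```python
def projected_spend(baseline: float, eligible_fraction: float, reduction: float) -> float:
    """Spend after applying a percentage reduction to the eligible share only."""
    if not 0.0 <= eligible_fraction <= 1.0:
        raise ValueError("eligible_fraction must be between 0 and 1")
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    eligible_spend = baseline * eligible_fraction
    return baseline - eligible_spend * reduction


# Hypothetical inputs: 10 million annual spend, 60% of systems eligible for SDDC.
baseline = 10_000_000
after_sddc = projected_spend(baseline, eligible_fraction=0.6, reduction=0.20)
saving = baseline - after_sddc
```

With these assumed inputs the saving is 20% of 6 million, or about 1.2 million. The reduction applies only to eligible systems, so the overall saving depends heavily on how much of the estate can actually be moved.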

Finally, as the quote from Citi shows, businesses have already started to implement these technologies and to benefit from them. According to Gartner, by 2020 the programmable capabilities of an SDDC will be considered a requirement by 75% of Global 2000 enterprises.

Quotes

“53% cost reduction over 5 years for 500TB Software Defined Storage over traditional NAS solution.”

IDC “The Economics of Software Defined Storage”

“By introducing commodity infrastructure underneath our software-defined architectures, we have been able to incrementally reduce unit costs without compromising reliability, availability and scale.”

Greg Lavender, Managing Director, Cloud Architecture and Infrastructure Engineering, Office of the CTO, Citi

Big Software covers a very wide area of virtualisation and abstraction across compute, storage and network technologies. We expect to see large, well-established vendors continue to cover many, if not all, areas. Often, they have achieved this through multiple acquisitions, and have built their capabilities into packaged hyper-converged solutions.

Some specialists have taken leading positions in software defined storage and hyper-converged solutions and have remained independent through building strategic partnerships with major vendors. Others will emerge and grow by focusing on particular niches, or technologies.

In networking, a mix of pure-play start-ups, traditional hardware vendors shifting towards SDN, and telcos are vying for market leadership.

The major operating system vendors have used their positions at the heart of IT infrastructure to build out significant software defined capabilities across the technology stack. With the introduction of containers, we see the hyperscale providers playing key roles along with newer container and orchestration companies.

Finally, we expect to see Big Software enabling a more holistic approach to infrastructure performance management and even, perhaps, the emergence of cloud-based performance SLAs.

What is the bottom line?

Big Software delivers real competitive advantage through significantly lower IT infrastructure costs and the ability to deploy more new business applications faster.

Commentary

Solutions

These organisations are also known to offer solutions:

- Alcatel-Lucent

- Avi Networks

- Big Switch Networks

- Canonical

- Citrix

- Coraid

- Cyan

- Dell

- Docker

- EMC

- Fujitsu

- HP

- IBM

- Juniper

- Kubernetes

- Microsoft

- NetApp

- Nutanix

- Quanta Cloud Technology

- Red Hat

- Stratoscale

- Virtana

- VMware Carbon Black