TIBCO transforms big data into big opportunity


Content Copyright © 2013 Bloor. All Rights Reserved.


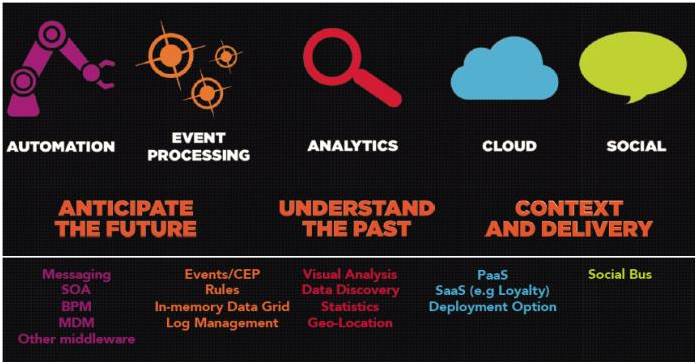

TIBCO came to London for their user conference (transFORM2013). This year’s theme was all about big data, and TIBCO’s senior executives outlined their strategy for their platform. The proceedings opened with Matt Langdon, TIBCO’s SVP Strategic Operations, setting the scene. Big data has come to mean many things to many people and there has been a lot of hype and mythology about the subject, so Langdon’s job was to expand on what it means to TIBCO: understand the past and you can better anticipate the future. Big data involves both ‘data at rest’ (transactions) and events. Langdon then went on to place TIBCO’s grouping of their products against this view of big data (see Figure 1).

Figure 1: TIBCO’s Platform for Big Data (Source: TIBCO)

Langdon showed how three of TIBCO’s customers were using their products to create business opportunities. Lloyds Banking Group was using the logs from their 700 million online transactions to understand customer behaviour, both to drive customer satisfaction and to troubleshoot problems as a way of maintaining the brand. Macy’s was collecting data from all their different sales channels to provide better service, passing information either to sales staff on the shop floor or to the online customer in order to up-sell and cross-sell. MGM Resorts International was using TIBCO’s Loyalty Lab to run their loyalty programme and to push information to their loyalty customers while they are on the premises.

What’s driving TIBCO

Murray Rode, TIBCO’s Chief Operating Officer, reported that the company had revenues of $1.025 billion for the last fiscal year and that its strategy was to deliver a platform that lets enterprises be event-driven and so achieve the two-second response (in the words of Ranadive, TIBCO’s founder and CEO: “a little bit of the right information a couple of seconds or minutes in advance, it’s more valuable than all of the information in the world six months after the fact”). Rode listed the capabilities added to their product portfolio over the last eighteen months:

- Automation – low-latency appliances, connectivity and manageability;

- Event processing – machine data, extreme volumes and streaming;

- Analytics – performance increased by 10, new statistics engine, new sources and mapping;

- Cloud – the release of “Cloudbus”, iPaaS and Loyalty re-architected;

- Social – more devices supported, increased integrations and user experience.

Some interesting statistics were then given that show TIBCO have been diversifying from their strong finance base:

| Industry | Percentage |
| --- | --- |
| Financial Services | 25% |
| Telecommunications | 15% |
| Government | 10% |
| Energy | 10% |
| Life Sciences | 7% |
| Insurance | 5% |
| Logistics | 5% |
| Retail | 5% |

Rode outlined the goals of the company: to create and sell a platform that enables solutions for a changing world, while leading strategic product segments and staying nimble and innovative as they grow to a multi-billion dollar business. These goals translate into a platform strategy of doubling down on integration, making analytics ubiquitous and furthering TIBCO’s cloud offering, supported by a marketing strategy based on expanding their presence in core markets and growing in GEO areas in Latin America, South Asia and Eastern Europe. TIBCO will foster their strengths in core verticals (Finance, Telco, Energy, Manufacturing, Life Sciences), whilst building in the Government, Insurance, Logistics, Media and Retail verticals. TIBCO will continue to diversify their product portfolio and enable solutions. To conclude his section of the opening proceedings, Rode gave an overview of 4 products.

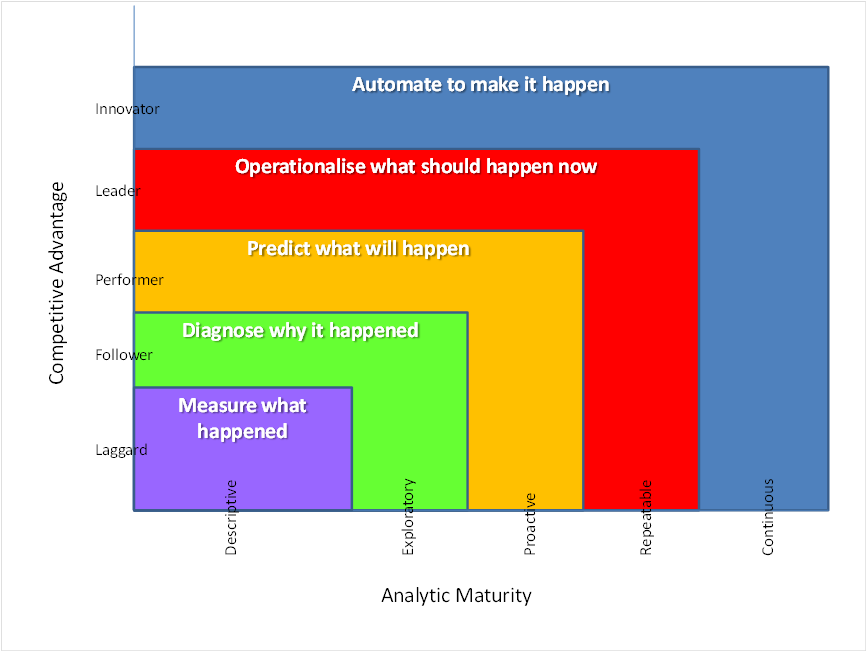

Analytics – understanding your maturity

The first of these was TIBCO Spotfire, their analytical engine. Before talking about the product, Rode introduced an Analytical Maturity Model, as shown in Figure 2. A number of companies, including IDC and IBM, have produced their own analytics maturity models. Like earlier maturity models, this one gives organisations a means of measuring where they are. The bottom level is where individuals or departments within an organisation analyse their own data, typically using spreadsheets or basic query tools. There is only a limited historical view of past performance, and therefore knowledge workers must rely in part on ‘gut feel’ in making business decisions. At the next level, individuals or departments within an organisation are able to diagnose what has happened. To achieve this there has to be more collaboration across the whole organisation, with the ability to use both historical and current views of data, as well as trending for past and future time periods. Decision makers may use dashboards or scorecards to drill down or sum up complex information quickly. At the third level, organisations are proactive and have the ability to predict what is likely to happen. To do this, data from various systems has to be combined to achieve a cohesive view of conditions. Integrated planning allows resources to be aligned so that predictions can be made about future outcomes and behaviour. At the next stage, organisations understand what should happen when an event occurs and begin to automate the response, on the way to the final level, which TIBCO call an “Innovator”.

Figure 2: TIBCO’s Analytic Maturity Model (Source: TIBCO)

What TIBCO have done with the release of Spotfire 5.5 in March 2013 is to provide a single environment to support organisations as they mature through the levels. Spotfire 5.0 had seen the product completely re-architected to handle large volumes of data. Spotfire 5.5 supports rule-based visualisations, contextual highlighting, ‘visual joins’ for heterogeneous data mashup (30 different databases can be integrated), and map visualisations. This last capability is provided through MAPORAMA, a product TIBCO acquired at the end of April 2013.

Supporting stream-based event processing

On June 11th 2013, TIBCO announced the acquisition of StreamBase Systems. StreamBase enables organisations to build, test and deploy real-time applications for streaming big data. So what is stream-based event processing? Event processing is a method of tracking and analysing streams of information about things that happen (i.e. events) and deriving a conclusion from them. StreamBase was founded some 10 years ago and the product essentially applies pattern analysis to event-driven processes. LiveView, its user interface, uses a push-based model and provides support for alerts and notifications with ad hoc queries. With StreamBase now in its stable, TIBCO are offering 3 different types of support for complex event processing:

- Stream – aimed at supporting financial trading processing covering market data feed handler, liquidity detector, execution algorithms, smart order router and order book;

- Standard – aimed at supporting track and trace covering risk and compliance, sense and respond covering real-time promotions, and situational awareness covering transport document delivery scenarios; and

- Extreme – aimed at payment processing and telecoms gateway type scenarios.
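To make the idea of deriving a conclusion from a stream of events concrete, here is a minimal Python sketch of one simple pattern: raising an alert when a source produces a burst of events of a given kind within a short time window. It is an illustration only and does not use StreamBase or any TIBCO API; the `Event` class, the `detect_bursts` function, the event kinds and the thresholds are all hypothetical.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, Iterable, Iterator, Tuple


@dataclass(frozen=True)
class Event:
    """Hypothetical event record; not a StreamBase or TIBCO type."""
    timestamp: float  # seconds since an arbitrary start
    source: str       # e.g. an account or device identifier
    kind: str         # e.g. "payment_declined"


def detect_bursts(
    events: Iterable[Event], kind: str, threshold: int, window: float
) -> Iterator[Tuple[str, float]]:
    """Yield (source, timestamp) whenever `threshold` events of `kind`
    from one source fall within `window` seconds of each other."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    for event in events:
        if event.kind != kind:
            continue
        times = recent[event.source]
        times.append(event.timestamp)
        # Drop timestamps that have slid out of the time window.
        while times and event.timestamp - times[0] > window:
            times.popleft()
        if len(times) >= threshold:
            yield event.source, event.timestamp
            times.clear()  # reset so that one burst raises one alert


# Made-up sample stream, ordered by timestamp.
stream = [
    Event(0.0, "acct-1", "payment_declined"),
    Event(1.0, "acct-2", "payment_declined"),
    Event(2.5, "acct-1", "payment_declined"),
    Event(3.0, "acct-1", "login"),
    Event(4.0, "acct-1", "payment_declined"),
    Event(30.0, "acct-2", "payment_declined"),
]
alerts = list(detect_bursts(stream, "payment_declined", threshold=3, window=5.0))
print(alerts)
```

Because the function is a generator that keeps only the timestamps still inside the window, it reacts to each event as it arrives rather than querying stored data afterwards, which is the distinction between events and ‘data at rest’ drawn earlier in the article.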

Integrating the platform to create solutions

Matt Quinn, TIBCO’s Chief Technology Officer, closed the session by asking the question, “What drives technology evolution?” The speed at which technology is currently evolving is remarkable. Quinn stated, “For a long time the technology arms race drove investments. This has been replaced by a focus on simplicity. No organisation is a green field in terms of technology and therefore we all have to pay interest on this debt every day. Just like when you spill red wine on a white carpet, some stains never come out. Simple does not replace complexity; rather it hides it from the business user. We have now reached a point where business people are technology-aware and IT people have at last become business-aware.” This is what is driving TIBCO’s product portfolio development.

The cloud, in Quinn’s view, is inevitable, but there is a need for a simple way of interfacing between systems in the cloud and on premise. To cater for this requirement, TIBCO have introduced TIBCO Cloud Bus, which provides an integration platform as a service (iPaaS) to integrate cloud and social applications in an organisation.

TIBCO announced Iris, a new software product designed to deliver application troubleshooting and forensic capabilities. Iris is a standalone solution to consume, process and view results for application and machine log data, to help troubleshoot and analyse enterprise applications. Gratovich stated, “With TIBCO Iris, we are complementing our ability to collect and manage massive amounts of log data with a self-service solution that will become indispensable to application developers and security experts who are charged with keeping systems running and companies safe.”

Another announcement was the latest version of TIBCO’s messaging platform, TIBCO FTL 3.1. This version includes a new distributed in-memory persistence engine that maximises performance, with guaranteed message delivery and a messaging throughput of over 850,000 messages per second. Quinn stated that TIBCO FTL 3.1 could be leveraged by any industry facing increasing data volumes, such as logistics and transportation companies, government, power and utilities companies and the high-tech manufacturing industry.

There was a lot to digest from all the announcements and the positioning by TIBCO at transFORM2013. Their message concerning big data not only plays to their strengths but also shows they have understood the real issues faced by organisations that have to process all types of data, but in context, i.e. within business processes. It will be interesting to see if TIBCO can achieve their business objectives and deliver their strategy over this year.