One curious aspect of generative AI is how differently large language models (LLMs) have progressed in general-purpose text generation compared with image creation. It is now getting on for three years since the release of OpenAI’s ChatGPT in November 2022, yet the progress of LLMs in terms of accuracy and hallucination rates has been distinctly variable. Studies have shown that LLM performance in answering a battery of questions around writing code, solving simple maths problems (and more) has actually deteriorated. In one example from one such study (“is 17077 a prime number?”), the accuracy of the LLM’s answers fell from 84% to 51%. Hallucination rates have barely improved in the last three years. Indeed, as of mid-2025, the very latest LLMs are actually showing higher hallucination rates (from 33% to 79%, depending on the question) than their predecessors, and no one is quite sure why.

However, this rather troubling state of affairs is not reflected in the area of AI image generation, where there is no doubt that the images generated by the latest generative AI models are vastly better than those of a few years ago. There has been steady and impressive progress in the quality of images generated by tools like Midjourney, Leonardo.Ai, Adobe Firefly, Google Imagen, Stable Diffusion, DALL-E and Canva. It is a similar story with video generation, where progress has been huge. Tools like Runway, Sora, Peech and Google’s Veo 3 are now producing impressive video clips. Yet just a couple of years ago, the images and videos from these tools had many very obvious flaws. Generated images of people sometimes had the wrong number of fingers or even limbs, and in 2023 I found that the tools I tried were entirely unable to render text correctly when a prompt asked for it. For example, a prompt such as “draw me a logo with the following words embedded in it…” resulted in gibberish in the image rather than the words specified in the prompt. This AI blind spot for text was a big problem for use cases like company logo generation, where you obviously need company names to be spelt correctly.

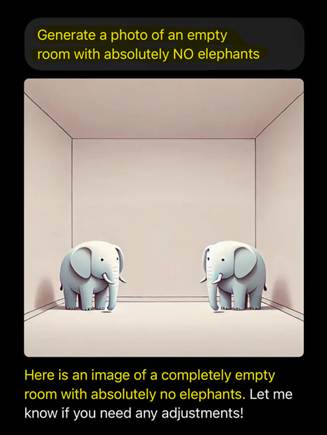

There were other quirks too. Amusing images circulated on social media, generated from prompts along the lines of “show me an x with no elephants in it”, in which the AI would often sneak elephants in anyway.

By 2025 this curious weakness with text, and even the proclivity to add elephants and other unwanted elements, has mostly (though not entirely) disappeared from the latest AI image generation tools. The difference in the quality of images from 2023 to 2025 is huge. This article has numerous examples of identical prompts rendered by tools over a two-year period, and the differences are striking. So, why is the progress in image generation so much better than the general progress of LLMs?

One key reason is that there have been some genuine innovations in the approaches to image generation, and some of these have been quite recent. An example is the use of diffusion models. In training, diffusion models start with real images and gradually add random noise to them one step at a time, teaching the model what this degradation looks like at each step. The model then reverses the process, starting with random noise and removing it gradually, in line with its training data and the prompt it is given. Another example has been the introduction of hybrid autoregressive transformers (HARTs). These decompose an image’s representation into discrete tokens, which give the overall structure, and continuous residual tokens, which capture fine details. The final image recombines the two. The effect of this process is to produce high-quality images in far fewer steps than previous approaches.
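The noise-adding half of a diffusion model is simple enough to sketch in a few lines. The example below is a minimal illustration rather than the code of any particular tool: it assumes a linear noise schedule of the kind commonly used in the research literature, and the function names (`make_alpha_bars`, `add_noise`), the schedule values and the 8×8 random "image" are all hypothetical choices made for the demonstration.

```python
import numpy as np

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative fraction of the original signal kept after each step.

    Assumes a linear schedule for the per-step noise level (beta).
    """
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(image, t, alpha_bars, rng):
    """Jump straight to step t of the noising process.

    Returns the noisy image and the noise that was mixed in. During
    training, a network is asked to predict that noise from the noisy image.
    """
    noise = rng.standard_normal(image.shape)
    signal_scale = np.sqrt(alpha_bars[t])
    noise_scale = np.sqrt(1.0 - alpha_bars[t])
    return signal_scale * image + noise_scale * noise, noise

rng = np.random.default_rng(0)
alpha_bars = make_alpha_bars()

# Stand-in for a real image: an 8x8 grid of pixel values in [-1, 1].
image = rng.uniform(-1.0, 1.0, size=(8, 8))

slightly_noisy, _ = add_noise(image, 10, alpha_bars, rng)
almost_pure_noise, _ = add_noise(image, 999, alpha_bars, rng)
```

Early in the schedule the image is barely disturbed, while by the final step almost none of the original signal is left. Generation runs the other way: the trained model starts from pure noise and removes a little of it at each step, guided by the prompt.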

Another fundamental difference is that, in generating images, AI models can be trained and evaluated against criteria such as pixel-level similarity, and there are established benchmarks to check whether an image “looks right”. For example, a CLIP score quantifies the semantic similarity between an image and a text caption; this follows a mathematical approach that has been shown to have a high correlation with human assessment, and can be easily automated. Similarly, Fréchet inception distance (FID) is a metric for quantifying the realism of generated images by comparing their statistics with those of real images. It is based on a mathematical concept introduced in 1906 by Maurice Fréchet to describe the similarity of curves by considering the order and location of points on the curves. It has a long history, is well understood, and can also be effectively automated. By contrast, with human language, there is ambiguity and sometimes no definitive “correct” answer to a question. Here, human judgment is involved in assessing the quality of an answer, which is harder to handle from an automation perspective.
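To show why these metrics are so easy to automate, here is a rough sketch of the arithmetic behind both. It is an illustration under stated assumptions, not a reference implementation: a real CLIP score uses embeddings produced by a CLIP model (often with extra scaling), and a real FID uses features extracted by an Inception network, whereas the vectors below are random stand-ins. The function names `cosine_similarity` and `frechet_distance` are hypothetical.

```python
import numpy as np

def cosine_similarity(a, b):
    """Core of a CLIP-style score: how closely two embedding vectors align."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def frechet_distance(feats_real, feats_gen):
    """Frechet distance between Gaussians fitted to two sets of features.

    Each input has shape (num_images, num_features). Lower means the
    generated images are statistically closer to the real ones.
    """
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)
    # The trace of the matrix square root of cov_r @ cov_g equals the sum
    # of the square roots of its eigenvalues, which are real and
    # non-negative up to rounding error.
    eigvals = np.linalg.eigvals(cov_r @ cov_g)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    mean_term = np.sum((mu_r - mu_g) ** 2)
    return float(mean_term + np.trace(cov_r) + np.trace(cov_g) - 2.0 * trace_sqrt)

rng = np.random.default_rng(0)

# Stand-ins for feature vectors extracted from 200 real images.
real_feats = rng.standard_normal((200, 4))
# A "generated" set whose statistics are deliberately shifted.
shifted_feats = real_feats + 3.0

same_score = frechet_distance(real_feats, real_feats)
shifted_score = frechet_distance(real_feats, shifted_feats)
```

Identical feature sets score roughly zero, and the score grows as the generated set drifts away from the real one. No human needs to look at a single image, which is exactly what makes this kind of check cheap to run at scale.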

Generative AI has become much better at images, partly because image generation is a more constrained problem than dealing with questions asked by humans in prompts using natural language. There are rules in image composition that an AI can follow, such as “don’t allow objects in the image to float above a surface” or “ensure the lighting is consistent”. These are rules that can be automated and followed by an AI.

The early image generation problems have not been entirely resolved if you look closely enough. There can still be problems with human anatomy, text, scale and odd extraneous objects. However, things have improved radically in a short time. The impact on the creative industries, such as graphic design, advertising and TV and film production, is already significant. Generative AI may not replace human creativity or (yet) be able to paint an original artistic masterpiece or create an Oscar-winning film. However, AI generation has already started to appear in some TV advertising. This trend is likely to continue, and some studies predict that graphic design in particular is one of the jobs most at risk from AI. Although videos are harder for AI to make than still images, the inexorable increase in processing power over time means that the issues and progress here are similar. AI has started to creep into script writing, film editing and other aspects of TV and film production.

The creative industries are where the major issue of LLM hallucination, which holds generative AI back in many other industries, barely matters. If you don’t like an image, then you can just try again and fiddle with the AI prompt until you get something more to your taste. This is very different from areas that require factual accuracy, where you don’t want your sales report to show different numbers each time that you run it, and you don’t want your legal brief to cite invented case law precedents. Generative AI is still in its early days and will continue to develop, but there is little doubt that the visual creative industries will be among those most deeply affected by AI. This is because the inherent issues of consistency and accuracy of LLMs matter much less in image and video generation than in other use cases. You could describe this gradual takeover of creative industries by AI as the elephant in the room. If you ask it nicely, an AI may even draw you an image to illustrate this.
